Testsigma Automation Standards and Best Practices

Testsigma Automation Standards emphasise the reusability of automated test cases to improve the testing process and maximise efficiency. By reusing test cases, quality engineers can speed up the overall testing process. Doing this well requires a solid understanding of test automation best practices, which make it practical to set up repeatable, thorough, and data-intensive tests. These best practices help produce reliable, accurate results while optimising testing effort.

Test Case Structure and Execution

- Write small, atomic, independent test cases so that each one stays focused, modular, and easy to maintain.

- Use Soft Assertions wherever possible. Soft assertions allow test execution to continue even if a verification step fails and provide more comprehensive test results.

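- Use Dynamic Waits, which wait only until a condition is met (for example, until an element is visible) instead of pausing for a fixed time. They improve test efficiency and reduce the chances of false positives or negatives in test results.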

- Structure your test cases using the AAA pattern, with three distinct sections: Arrange, Act, and Assert. In the Arrange section, you set the preconditions for the test; in the Act section, you perform the actions being tested; and in the Assert section, you verify the expected outcomes.
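In Testsigma you build these sections from NLP steps, but both ideas are general testing practices, so here is a plain-Python sketch of them. The `wait_until` helper is a dynamic wait: it polls a condition until it is true or a timeout expires, rather than sleeping for a fixed time. `FakeCart` is a hypothetical stand-in for an application whose UI updates asynchronously, and the test is laid out in Arrange, Act, and Assert sections.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0, interval: float = 0.1) -> bool:
    """Dynamic wait: poll `condition` until it returns True or `timeout` seconds pass.

    Returns as soon as the condition holds, so fast pages are not slowed down by a
    fixed sleep, while slow pages still get up to `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


class FakeCart:
    """Hypothetical application under test: added items appear only after a delay."""

    def __init__(self, ready_after: float = 0.2) -> None:
        self.items: list[str] = []
        self._pending: list[str] = []
        self._ready_at = 0.0
        self._ready_after = ready_after

    def add(self, item: str) -> None:
        # Simulates an asynchronous (AJAX-style) update of the cart.
        self._pending.append(item)
        self._ready_at = time.monotonic() + self._ready_after

    def visible_items(self) -> list[str]:
        if self._pending and time.monotonic() >= self._ready_at:
            self.items.extend(self._pending)
            self._pending.clear()
        return list(self.items)


def test_add_item_to_cart() -> None:
    # Arrange: set the precondition, an empty cart.
    cart = FakeCart(ready_after=0.2)

    # Act: perform the single action under test.
    cart.add("book")

    # Assert: wait dynamically for the outcome, then verify it.
    assert wait_until(lambda: "book" in cart.visible_items(), timeout=2.0)
    assert cart.visible_items() == ["book"]


test_add_item_to_cart()
print("test_add_item_to_cart passed")
```

Because each test arranges its own state, it stays independent of other tests and can run in any order.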

Assertions and Verifications

- Assertions define the expected outcomes of an automated test case: each verification specifies a validation to perform at a specific point in the test.

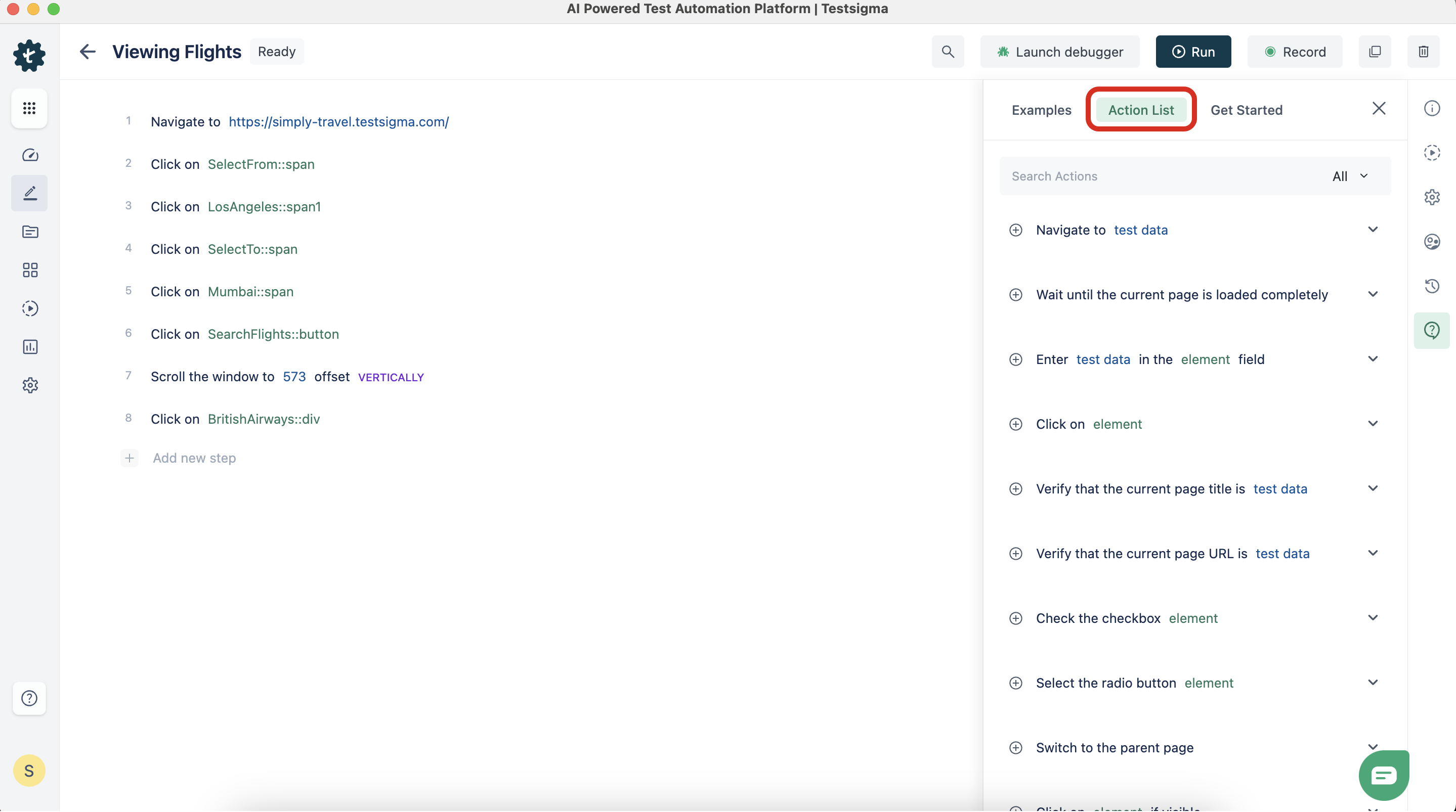

- Navigate to Help > Actions List on the Test Case details page to find NLPs with assertions in Testsigma.

- By default, a failed verification marks the whole test case as failed: the remaining test steps are skipped and test case execution stops.

- To implement soft assertions for scenarios where the remaining steps must still run after a test step fails, follow these steps (for more information, refer to test step settings):

  - Hover over the test step, click Option, and choose Step Settings from the dropdown.

  - Uncheck Stop Test Case execution on Test Step failure and click Update.

- With this setting, you can let specific steps continue executing even if a verification fails.
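Testsigma configures soft assertions through step settings rather than code. To show the difference in behaviour, here is a minimal Python sketch of the same idea: a hypothetical `SoftAssert` collector records each failed check and carries on, then reports every failure at the end, whereas a plain `assert` would stop at the first failure.

```python
from dataclasses import dataclass, field


@dataclass
class SoftAssert:
    """Hypothetical soft-assertion collector: records failures instead of stopping."""

    failures: list[str] = field(default_factory=list)

    def check(self, condition: bool, message: str) -> None:
        # Record the failure and let the remaining steps run.
        if not condition:
            self.failures.append(message)

    def assert_all(self) -> None:
        # Fail once, at the end, listing every failed verification.
        if self.failures:
            raise AssertionError("; ".join(self.failures))


def verify_profile_page(page: dict[str, str]) -> SoftAssert:
    """Run three verifications on a (hypothetical) profile page without stopping early."""
    soft = SoftAssert()
    soft.check(page.get("title") == "Profile", "title mismatch")
    soft.check(page.get("name") == "Alice", "name mismatch")
    soft.check(page.get("email") == "alice@example.com", "email mismatch")
    return soft


result = verify_profile_page({"title": "Settings", "name": "Alice", "email": "bob@example.com"})
print(result.failures)  # ['title mismatch', 'email mismatch']
```

Both failures are reported in one run, which is what makes soft assertions give more complete results than stopping at the first failed step.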

Test Case Organization and Management

- Filter, segment, and organise test cases so you can identify and locate specific tests quickly and streamline test management.

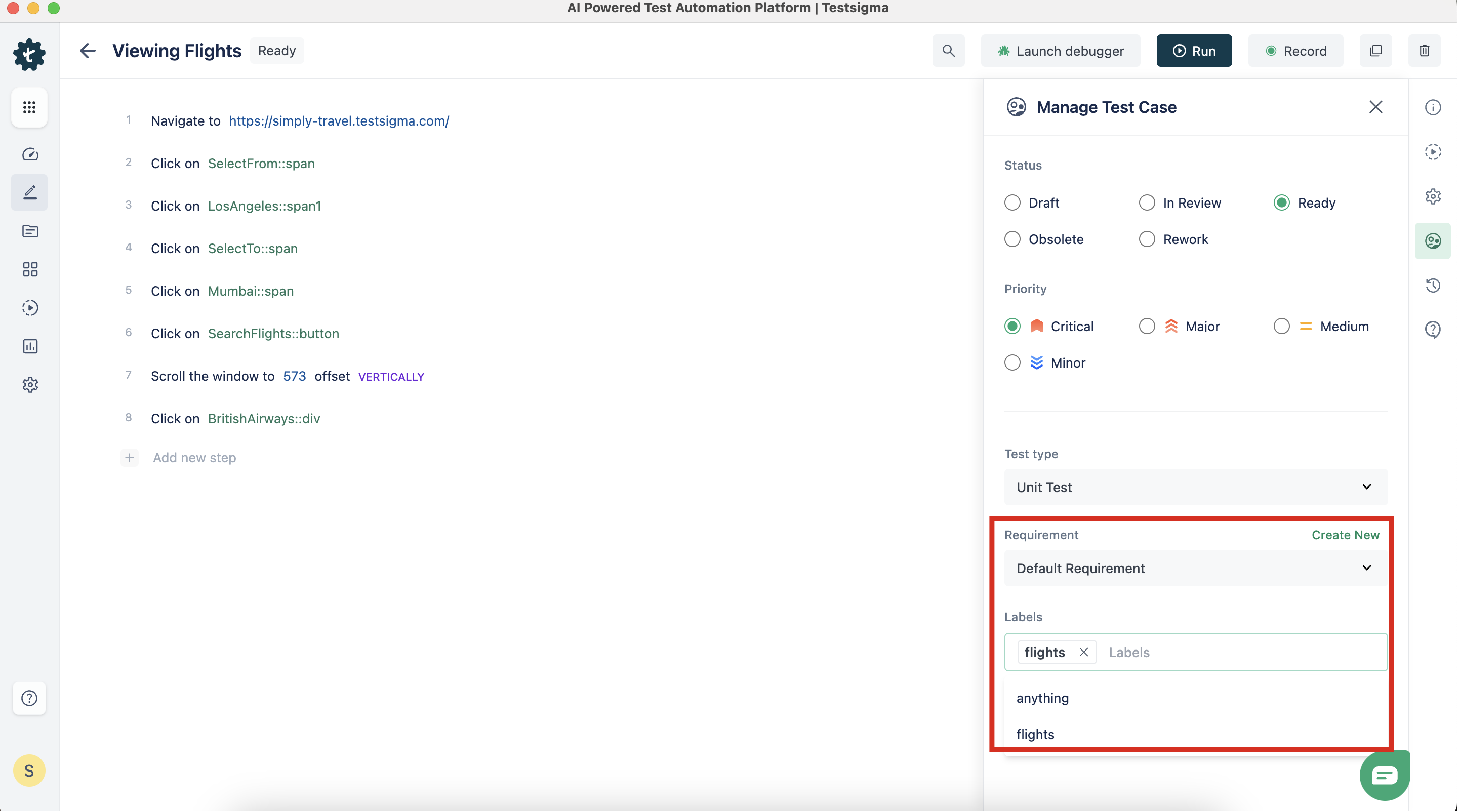

- Add labels to test cases or map them to relevant requirements to make filtering easier. You can then filter test cases and save them in separate views based on their labels or mapped requirements.

- During test case creation or editing, you can add labels. The label field is available by default in the test case.

- You can Save Filters to quickly access and manage test cases associated with a particular functionality or scenario, such as those related to login. For more information, refer to save test case filter.

Customisation and Extensibility

- You can use add-ons to extend Testsigma's repository of actions and create custom NLPs for specific actions that are not available in the built-in Actions List.

- Share your add-ons with the test automation community, or use existing ones, through the Add-ons Community Marketplace. Add-ons provide additional functionality that expands the capabilities of Testsigma. For more information, refer to create an add-on.

Create an add-on that verifies text from two DOM elements.

Reusability and Modularity

- To avoid duplication and simplify test maintenance, use Step Groups as common reusable functions across test cases. Step Groups promote modular test design and easy maintenance by separating reusable components from the test flow. Any change made to a Step Group is reflected in all test cases that invoke it. For more information, refer to step groups.

Create a Step Group to reuse login functionality in multiple test cases.
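In Testsigma, a Step Group is built from test steps in the editor, not written as code. As an analogy only, a Step Group behaves like a shared Python function: every test case calls it instead of repeating the login steps, so a change to the login flow is made in one place. `FakeBrowser`, `login_step_group`, and the two test-case functions are hypothetical names used for this sketch.

```python
class FakeBrowser:
    """Hypothetical browser stand-in that records the actions performed on it."""

    def __init__(self) -> None:
        self.actions: list[str] = []

    def do(self, action: str) -> None:
        self.actions.append(action)


def login_step_group(browser: FakeBrowser, username: str, password: str) -> None:
    """Reusable login steps shared by every test case that needs a signed-in user."""
    browser.do("navigate to /login")
    browser.do(f"enter {username} in UsernameInput")
    browser.do(f"enter {password} in PasswordInput")
    browser.do("click LoginButton")


def view_orders_case() -> FakeBrowser:
    browser = FakeBrowser()
    login_step_group(browser, "alice", "secret")  # reuse instead of duplicating steps
    browser.do("click OrdersLink")
    return browser


def update_profile_case() -> FakeBrowser:
    browser = FakeBrowser()
    login_step_group(browser, "alice", "secret")
    browser.do("click ProfileLink")
    return browser
```

If the login page changes, only `login_step_group` needs updating; both test cases pick up the change automatically.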

- Use REST API Steps to automate repetitive UI actions. Performing these actions through REST API steps improves test stability and reduces test execution time compared to performing them through the UI. For more information, refer to REST API.
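REST API steps are configured in the Testsigma test case editor; this Python sketch only illustrates the underlying idea of replacing a repetitive UI set-up (filling in a sign-up form) with a single HTTP request. The endpoint `https://app.example.com/api/users` and its JSON fields are hypothetical, and the request is built but not sent.

```python
import json
import urllib.request


def build_create_user_request(base_url: str, username: str, email: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that creates a test user via a hypothetical API."""
    body = json.dumps({"username": username, "email": email}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/api/users",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_create_user_request("https://app.example.com", "alice", "alice@example.com")
print(req.get_method(), req.full_url)  # POST https://app.example.com/api/users
# Against a real API, the request would be sent with urllib.request.urlopen(req).
```

One request like this replaces several UI steps, which is why API-based set-up tends to be faster and less brittle than driving the UI.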

Element Management

- Name elements according to a consistent naming convention so they can be reused in multiple test cases. For more information, refer to create an element.

Use descriptive names such as "UsernameInput" or "LoginButton" to make them easy to identify.

- Map the appropriate context details when you create elements inside iFrames or Shadow DOM contexts. Mapping context details ensures that you correctly identify and interact with elements within those contexts. For more information, refer to create a shadow DOM element.

- Save filters and create views based on screen names to access elements easily. You can also check whether an element already exists in Testsigma's repository before recreating it. For more information, refer to save element filters.

Create a view that displays elements related to the "Login" screen for quick reference.

Variables and Scopes

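| Scope | Description | Usage |
|---|---|---|
| Environment | Stores information about the current environment. | Reference with the * prefix, for example * url. |
| Runtime | Values stay the same throughout a sequential test run; other tests can update them. For more information, refer to runtime variable. | Reference with the $ prefix, for example $ name. |
| Test Data Profile | Stores test data as key-value pairs in data sets for reuse across test cases. | Reference data set columns with the @ prefix, for example @ username. |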

Data-Driven Testing

- For data-driven testing, enable the data-driven toggle in the test case and use a Test Data Profile to perform the same actions with different sets of test data. For more information, refer to data-driven testing.

- Test Data Profiles use key-value pair format to store project configuration data, database connection details, and project settings for easy access and reuse of test data.

Create a Test Data Profile named "ConfigData" to store configuration-related test data.

- Link a test case to a test data profile and data set, then use the @ Parameter test data type in an NLP to reference specific columns of the data set in your test steps.

Link login credentials to a test data profile and use it to test different user logins in a test case.
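Conceptually, a data-driven test case runs the same steps once for each data set in its Test Data Profile. The Python sketch below models a profile as a list of key-value data sets and runs one login check per set; the set names, columns, credentials, and `attempt_login` function are all hypothetical.

```python
# Hypothetical Test Data Profile: each data set is a row of key-value pairs.
LOGIN_PROFILE = [
    {"set_name": "valid_user", "username": "alice", "password": "correct-pw", "expected": "success"},
    {"set_name": "wrong_password", "username": "alice", "password": "bad-pw", "expected": "error"},
    {"set_name": "unknown_user", "username": "mallory", "password": "any-pw", "expected": "error"},
]

# Hypothetical registered users for the stand-in login flow.
REGISTERED_USERS = {"alice": "correct-pw"}


def attempt_login(username: str, password: str) -> str:
    """Stand-in for the login flow under test."""
    return "success" if REGISTERED_USERS.get(username) == password else "error"


def run_data_driven(profile: list[dict[str, str]]) -> dict[str, bool]:
    """Run the same steps once per data set and record pass/fail by set name."""
    results = {}
    for data_set in profile:
        # Columns are read by name, much as @ parameters refer to profile columns.
        outcome = attempt_login(data_set["username"], data_set["password"])
        results[data_set["set_name"]] = outcome == data_set["expected"]
    return results


print(run_data_driven(LOGIN_PROFILE))
# {'valid_user': True, 'wrong_password': True, 'unknown_user': True}
```

Adding a new scenario means adding a row to the profile, not writing a new test case.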

Test Data Types

| Data Type | Usage | Examples |
|---|---|---|
| Plain Text | Used for storing general textual data. | "Hello World", "Test123" |
| @ Parameter | Dynamically changeable values in a test case. | @ username, @ password |
| $ Runtime | Values assigned/updated during test execution. | $ name, $ currenttime |
| * Environment | Stores information about the current environment. | * url, * website |
| ~ Random | Generates random values within specified constraints. | Random item from a list |
| ! Data Generator | Generates test data based on predefined rules. | ! TestDataFromProfile :: getTestDataBySetName |
| % Phone Number | Stores phone numbers. | % +123456789 |
| & Mail Box | Stores email addresses. | & automation@name.testsigma.com |

Configuration for Test Execution

- Upload files that test steps need in Test Data > Uploads; each file must stay within the maximum size limit of 1024 MB. Testsigma always uses the latest version of an uploaded file. For more information, refer to uploads.

- Configure Desired Capabilities for cross-browser testing with specific browser configurations. You can configure Desired Capabilities for ad-hoc runs and test plans. For more information, refer to desired capabilities.

Specify the desired capabilities for the target test environment, such as browser version or device type.
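Desired capabilities are key-value pairs sent to the browser or device session when it starts. As an illustration, the Python dictionary below uses a few standard W3C WebDriver capability names; the values are examples only. In Testsigma you enter capabilities through the Desired Capabilities settings of an ad-hoc run or test plan, not in code.

```python
# Standard W3C WebDriver capability names; the values are illustrative examples.
capabilities = {
    "browserName": "chrome",
    "browserVersion": "latest",  # assumption: use a version your test lab actually supports
    "platformName": "windows",
    "acceptInsecureCerts": True,
    "pageLoadStrategy": "normal",
    # Browser-vendor options are namespaced; Chrome uses the "goog:" prefix.
    "goog:chromeOptions": {"args": ["--window-size=1920,1080"]},
}
```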

- Make sure test cases are in the Ready state before you add them to a Test Suite. Group related tests into test suites to keep them organised and run them together. For more information, refer to test suites.

Create a "Login Suite" and add all relevant login-related test cases for efficient execution.

Execution and Test Plan Run

- Run test cases and test plans in headless mode to reduce execution time, since the browser does not render a visible window. For more information, refer to headless browser testing.

To achieve faster test execution, execute the test plan without a visible browser.

- Use the Partial Run option in the test plan to exclude consistently failing test suites from runs. You can exclude or disable tests from the Test Machines & Suites Selection in the test plan. For more information, refer to partial run.

- Use the Schedule feature to run the test plan automatically without manual intervention. For more information, refer to schedule a test plan.

Schedule the test plan to run unattended during non-business hours.

Testsigma Recorder Extension

- Use the Testsigma Recorder Extension to record user interactions on web applications. Customise and modify the recorded test steps to align with the desired test case behaviour. For more information, refer to recording test steps.

- Use the recorder extension's Automatic Element Identification feature to capture elements easily, and add validations and verifications while recording so that the test steps include the necessary assertions.

Third-Party Integration

- Avoid relying on third-party UI elements for UI actions. Instead, use APIs or a mock server to simulate those interactions in the Application Under Test (AUT). This makes tests less fragile.
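Here is a minimal Python sketch of replacing a third-party dependency with a mock, using the standard library's `unittest.mock`. The `PaymentGateway` class and `checkout` function are hypothetical; the point is that the test controls the third-party response instead of driving the real provider's UI or service.

```python
from unittest import mock


class PaymentGateway:
    """Hypothetical client for an external payment provider."""

    def charge(self, amount_cents: int, card_token: str) -> dict:
        raise RuntimeError("would call the real third-party service")


def checkout(gateway: PaymentGateway, amount_cents: int, card_token: str) -> str:
    """Code under test: depends on the third party only through `charge`."""
    response = gateway.charge(amount_cents, card_token)
    return "order confirmed" if response.get("status") == "approved" else "payment declined"


# Replace the real gateway with a mock that has the same interface and a controlled response.
fake_gateway = mock.create_autospec(PaymentGateway, instance=True)
fake_gateway.charge.return_value = {"status": "approved"}

print(checkout(fake_gateway, 4999, "tok_test"))  # order confirmed
fake_gateway.charge.assert_called_once_with(4999, "tok_test")

# Simulate a declined payment without touching the real provider.
fake_gateway.charge.return_value = {"status": "declined"}
print(checkout(fake_gateway, 4999, "tok_test"))  # payment declined
```

Using `create_autospec` keeps the mock's method signatures in line with the real class, so a call with the wrong arguments fails the test instead of passing silently.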