
Imagine ordering something from an e-commerce website. You notice that pages take a long time to load fully, and placing a single order takes forever. Will you use the site again? Would you even recommend it to relatives or friends? Probably not. So you can see that being bug-free alone does not determine the quality of a software application. An application also has to respond quickly and stay available under real-world load, and the only way to know that it does is to test it thoroughly. In this article, we’ll go over the fundamentals of performance testing, including what it is and some commonly used buzzwords and methodologies.

Table Of Contents

- 1 What is Performance Testing?

- 2 Performance Testing Example

- 3 Why Performance Testing is Necessary?

- 4 Objectives of Performance Testing

- 5 What are the Characteristics of Effective Performance Testing?

- 6 Real-World Examples of Performance Testing

- 7 When is the Right Time to Conduct Performance Testing?

- 8 Performance Testing Life Cycle

- 9 Test Cases for Performance Testing

- 10 Why Automate Performance Testing?

- 11 What Does Performance Testing Measure? – Attributes and Metrics

- 12 Types of Performance Testing

- 13 Cloud Performance Testing

- 14 Advantages of Performance Testing

- 15 Disadvantages of Performance Testing

- 16 How to Develop a Successful Performance Test Plan?

- 17 How to Do Performance Testing?

- 18 Best Practices for Implementing Performance Testing

- 19 Tips for Performance Testing

- 20 Performance Testing Challenges

- 21 Conclusion

- 22 Frequently Asked Questions

- 22.1 How to Automate Performance Testing Using JMeter?

- 22.2 What is the difference between load testing and performance testing?

- 22.3 Is Performance Testing the Same as Functional Testing?

- 22.4 How to Do Performance Testing Manually?

- 22.5 What is Failover Testing in Performance Testing?

- 22.6 How to Do Performance Testing for Web Applications?

- 22.7 What is KPI in Performance Testing?

What is Performance Testing?

Performance testing is the process of evaluating the speed, scalability, and stability of a software application under a variety of conditions. To ensure that a software application meets its performance requirements, it is important to understand and use the different terminologies and techniques associated with software performance testing.

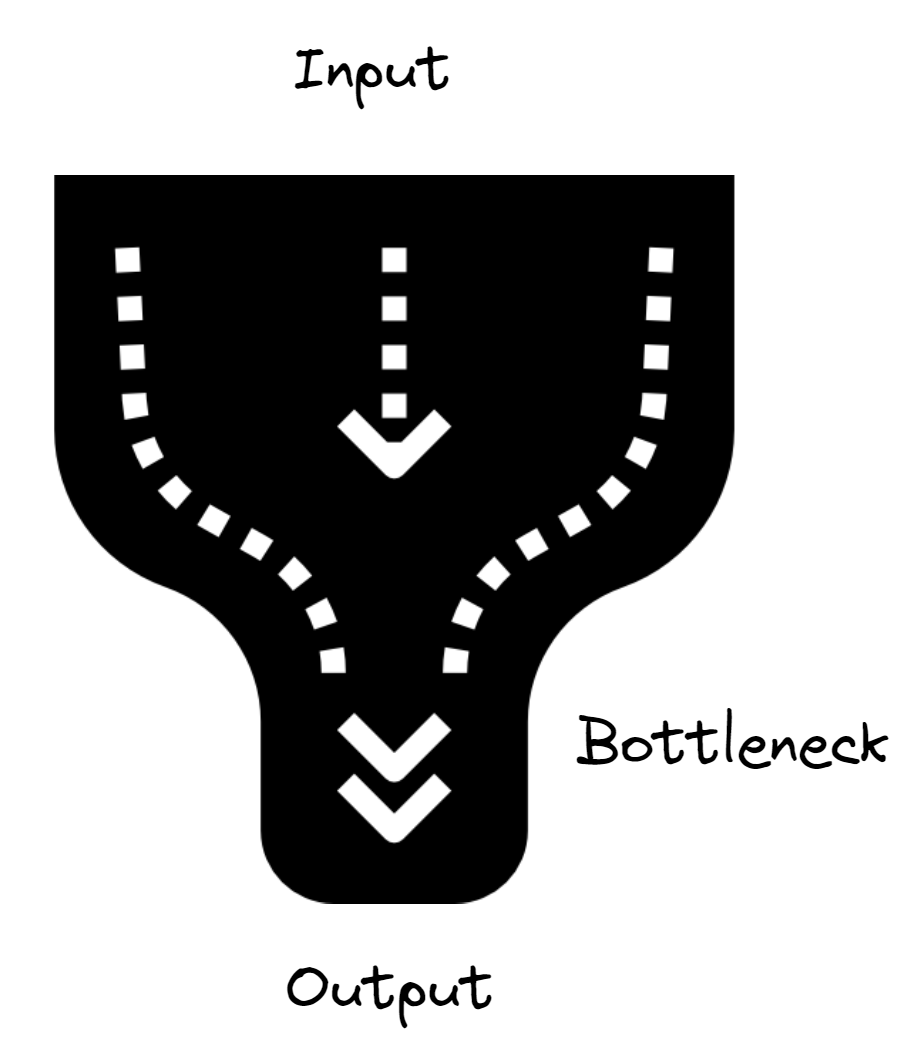

It aims to expose potential performance bottlenecks and to identify errors and failures that appear only under load. That is why it is essential to validate how a system performs under production-like conditions before releasing it to the live environment.

It can take many forms. For example, system performance testing evaluates the system’s overall speed and efficiency in responding to user inputs. Web application performance tests assess a web application’s response time and resource consumption when it receives increased traffic from multiple sources. Load testing checks that an application can support a large number of simultaneous users by simulating many user requests at once. Also, remember that performance testing is a type of non-functional testing.

Performance Testing Example

Performance testing is a software testing type that evaluates how well an application performs under various conditions and user loads. It aims to identify performance issues, scalability issues, and response times to ensure the application meets performance requirements.

Example: Testing an e-commerce site by simulating a high number of concurrent users making purchases to ensure that the website’s response time, server load, and transaction processing remain acceptable even during peak traffic periods.
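The core idea of this example can be sketched in a few lines of Python. Below, a thread pool plays the role of concurrent virtual users, and each user times one purchase. `simulated_purchase` is a hypothetical stand-in that only sleeps; in a real test it would send an HTTP request to the site under test. The user count and the delays are illustrative assumptions.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def simulated_purchase(user_id: int) -> None:
    """Hypothetical stand-in for one checkout request: sleeps 50-150 ms.

    In a real test this would send an HTTP request to the site under test.
    """
    time.sleep(random.uniform(0.05, 0.15))


def timed_purchase(user_id: int) -> float:
    """Run one transaction and return its response time in seconds."""
    start = time.perf_counter()
    simulated_purchase(user_id)
    return time.perf_counter() - start


def run_concurrent_users(num_users: int) -> list[float]:
    """Start num_users virtual users at once and collect their response times."""
    with ThreadPoolExecutor(max_workers=num_users) as pool:
        return list(pool.map(timed_purchase, range(num_users)))


if __name__ == "__main__":
    times = run_concurrent_users(50)
    print(f"{len(times)} purchases, slowest took {max(times):.3f} s")
```

The stub only sleeps, so its response times stay flat no matter how many users run. Against a real server, the interesting part is watching these times grow as `num_users` increases.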

Why Performance Testing is Necessary?

“It takes months to find a customer… seconds to lose one.” – Vince Lombardi

The importance of application performance cannot be overstated. Performance testing is essential for ensuring that your applications are running at optimal performance and can handle the demands of your users.

It can help you identify weak points in your application that could lead to slowdowns or crashes.

It can also help you identify possible bottlenecks and address them before they affect users. Doing so helps your application run smoothly and efficiently, which gives your users a better experience. Start performance testing early to confirm that your apps perform properly and provide the best user experience possible.

In brief, performance testing is essential for businesses because it provides the insights needed to confirm, before deployment, that systems will perform as required.

Objectives of Performance Testing

Software is only as popular and reliable as its performance. Before examining the performance of any product, keep these objectives in mind:

- This testing aims to identify weaknesses and improve the overall performance of the system.

- It determines how well the system can scale to accommodate increasing user loads.

- The idea behind performance testing is to ensure that the software is stable and fast for its users.

- It determines how well the application handles multiple users or processes executing concurrently, ensuring data integrity and proper synchronization.

- Testing the performance of an application evaluates the system’s ability to smoothly transition to backup or failover components in the event of hardware or software failures.

- The aim is to verify the system’s stability and performance over an extended period to detect issues related to resource leakage or degradation over time.

What Are the Characteristics of Effective Performance Testing?

Effective performance testing goes beyond simply running a few simulations and reporting results. It requires a strategic approach that ensures valuable insights and tangible improvements.

Here are some key characteristics:

1. Goal-oriented: The tests should be driven by specific goals and objectives aligned with the system’s purpose and user needs. Rather than running a generic “stress test,” define the desired performance benchmarks and the metrics to measure against.

2. Realistic scenarios: Test scenarios should mimic real-world user behavior and workloads, including peak usage times, different user types, and varying data volumes. Don’t just throw a random load at the system.

3. Extended coverage: Conduct a variety of tests beyond just load testing. Consider stress, scalability, and security testing to identify bottlenecks and vulnerabilities.

4. Continuous process: Performance testing shouldn’t be a one-time event. Integrate it throughout the development cycle, including early on with prototypes and regularly after making changes.

5. Data-driven analysis: Don’t just collect metrics; analyze them objectively to identify trends, bottlenecks, and areas for improvement. Use clear visuals and reports to communicate findings effectively.

6. Actionable insights: Translate test results into concrete recommendations for developers and stakeholders. Focus on prioritizing critical issues and implementing effective solutions.

7. Tool flexibility: Use appropriate tools that match your needs and budget. Don’t get stuck with one specific platform; adapt and utilize different tools for different testing types.

8. Continuous improvement: Monitor the system after implementing changes and re-test regularly to ensure sustained performance improvements.

Real-World Examples of Performance Testing

Below are some examples that show challenges, solutions, and root causes.

Example 1. E-Commerce Websites During Holiday Sales

Scenario: Big platforms like Amazon or Flipkart get huge traffic during events like Black Friday or Diwali sales. Millions of users browse, add products to carts, and complete transactions all at once.

Challenges:

- Servers crash because of too much traffic.

- Pages load slowly, and users abandon their carts.

- Payment gateway delays make transactions fail.

Mitigation Techniques:

- Run load testing to simulate heavy user traffic and find system limits.

- Use caching to lower server stress.

- Speed up database queries for quick responses.

- Use CDNs to handle traffic better.

RCA (Root Cause Analysis) Example: During a flash sale, database locks occurred while inventory was being updated, which slowed down transactions. To fix it, the company switched to asynchronous inventory updates.

Example 2. Streaming Services During Popular Events

Scenario: Platforms like Netflix and YouTube get a lot of traffic during big events, like live sports or new movie releases.

Challenges:

- Videos buffer slowly or their quality drops when too many users join.

- Servers crash from high traffic.

- Latency makes it harder for global users to stream smoothly.

Mitigation Techniques:

- Stress test the system to find its traffic limits.

- Use adaptive bitrate streaming to adjust video quality as per network speed.

- Deploy servers in different regions to handle local traffic better.

RCA Example: A live sports stream failed in one region due to server issues. RCA found weak failover mechanisms. The team fixed it by replicating data and services on more servers.

Example 3. Banking Applications During Payday

Scenario: Apps like PayPal or online banking portals see heavy use during paydays. Users transfer funds, check balances, and pay bills.

Challenges:

- Transactions fail due to overloaded databases.

- Payments get delayed.

- Security risks increase when system performance drops.

Mitigation Techniques:

- Do endurance testing to check if systems can handle long periods of heavy traffic.

- Use database sharding to spread out the load.

- Predict high-traffic times and allocate extra resources early.

RCA Example: On payday, a banking app saw many failed transactions. RCA showed that the payment gateway API was outdated and couldn’t handle the volume of requests. Upgrading the API and adding server capacity solved the issue.

Performance testing helps us prepare for these scenarios. It ensures users get a smooth experience, and companies keep their reputation safe.

When is the Right Time to Conduct Performance Testing?

Every piece of software goes through multiple stages of the SDLC, two of which are development and deployment. If there is a right time to run performance testing on an application, it is primarily during these two phases.

When working on development, testing the performance of the software focuses on various components, including microservices, web services, and APIs. The goal here is to verify the underlying elements of the application that affect its performance as early as possible.


Next comes deployment, which the software enters once it has reached its final shape. Users receive the application and start using it in large numbers, often hundreds or thousands at a time. Keeping an eye on performance at this stage is crucial, which makes this last stage the other right time to run performance tests.


Performance Testing Life Cycle

A performance testing lifecycle involves planning, designing, executing, and analyzing performance tests. It begins with defining objectives, selecting tools, creating test scripts, and setting up test environments. Test execution involves simulating various user loads and monitoring system performance. Finally, results are analyzed to identify bottlenecks and optimize system performance.

Test Cases for Performance Testing

Now that we’ve covered the theory of performance testing, let’s look at some common test cases you should know about.

TC01: Load test – verify that the application can handle 100 concurrent users accessing the application. The response time should not be more than 2 seconds.

TC02: Stress test – verify that the application manages system resources, such as CPU and memory, without crashing or exhausting those resources when hundreds of users access it.


TC03: Endurance test – verify that the system stays stable while continuously executing user transactions for more than 24 hours.

TC04: Baseline performance test – establish a baseline for typical performance metrics, including response times, throughput, and resource utilization under normal load.

TC05: Scalability test – verify that the system supports gradually adding application servers, and monitor how load is distributed and how performance changes as it scales.
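Test cases like these are easier to automate when each one is written down as data: the load to apply and the criterion that decides pass or fail. Here is a minimal Python sketch of that idea. The `PerfTestCase` class and its field names are hypothetical. The 100 users and 2-second limit for TC01, and the 24 hours for TC03, come from the text above; every other value is an illustrative assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PerfTestCase:
    """One performance test case: the load to apply and its pass criterion."""
    case_id: str
    test_type: str
    virtual_users: int
    duration_s: int
    max_response_s: Optional[float] = None  # None: record metrics, no threshold

    def passes(self, response_times_s: list[float]) -> bool:
        """Pass if there are results and none exceeds the response-time limit."""
        if not response_times_s:
            return False  # no data means the run did not really happen
        if self.max_response_s is None:
            return True  # e.g. a baseline run only records metrics
        return max(response_times_s) <= self.max_response_s


# TC01's 100 users / 2 s and TC03's 24 h come from the text; other values are illustrative.
TEST_CASES = [
    PerfTestCase("TC01", "load", virtual_users=100, duration_s=10 * 60, max_response_s=2.0),
    PerfTestCase("TC03", "endurance", virtual_users=100, duration_s=24 * 3600, max_response_s=2.0),
    PerfTestCase("TC04", "baseline", virtual_users=20, duration_s=10 * 60),
]
```

A test runner (not shown) would apply each case’s load for `duration_s` seconds and then call `passes()` on the collected response times.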

Why Automate Performance Testing?

The short answer: to make the process more agile and save time. But automation brings benefits that a one-line answer can’t cover, so here is a longer one.

Performance tests often involve simulating a large number of users or complex scenarios, which is time-consuming and error-prone to do manually. Automation lets testers run these tests repeatedly and at scale, so they can identify bottlenecks, scalability issues, and performance regressions much faster.

Secondly, automation adds consistency and repeatability. Testers may inadvertently introduce variations or biases when conducting tests manually. Automated performance tests largely eliminate this because they follow predefined scripts and configurations in a consistent, reproducible way.
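Repeatability is what makes automated regression checks possible. Each run’s metrics can be compared against a stored baseline. Here is a minimal Python sketch, assuming hypothetical metric names and a 10% tolerance; real projects choose their own metrics and limits.

```python
# Metrics where a larger value is worse; for anything else (e.g. throughput) larger is better.
LOWER_IS_BETTER = {"p95_response_s", "error_rate"}


def find_regressions(baseline: dict[str, float], current: dict[str, float],
                     tolerance: float = 0.10) -> list[str]:
    """Return the names of metrics that got worse than baseline by more than `tolerance`."""
    regressions = []
    for name, base in baseline.items():
        now = current[name]
        if name in LOWER_IS_BETTER:
            worse = now > base * (1 + tolerance)
        else:
            worse = now < base * (1 - tolerance)
        if worse:
            regressions.append(name)
    return regressions


baseline = {"p95_response_s": 1.0, "throughput_rps": 200.0, "error_rate": 0.01}
current = {"p95_response_s": 1.25, "throughput_rps": 195.0, "error_rate": 0.01}
print(find_regressions(baseline, current))  # ['p95_response_s']
```

In a CI pipeline, a script like this could exit with a non-zero status whenever the returned list is not empty, which fails the build.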

What Does Performance Testing Measure? – Attributes and Metrics

Performance testing is a vital phase of the software development life cycle. It assesses a system’s performance needs with the end user in mind. It also comes with its own technical jargon, which is worth understanding and mastering so that it doesn’t feel intimidating. Here are a few technical terms you may hear when you work with a performance team or start learning about this testing:

Non-Functional Requirements (NFR): NFRs define how a system should behave, as opposed to what it should do. They cover aspects such as performance, security, maintainability, scalability, and usability, and they provide the necessary checks and balances for the functional requirements. For example, requirements related to usability or scalability are non-functional requirements.

Virtual users: A virtual user is a simulated replica of a real user. During testing, it is usually impractical to have many real users, so we emulate them. A virtual user mimics an actual user by following a scripted path through the system, sending requests and collecting response data as it goes.

Bottlenecks: Broadly stated, a bottleneck is a point at which an issue arises. When it comes to performance testing, a bottleneck is a resource that limits or restricts the system’s performance.

Scalability: Scalability is the capacity of a system to modify its performance and cost in response to changes in application and system processing demands.

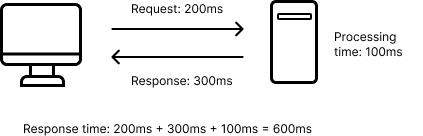

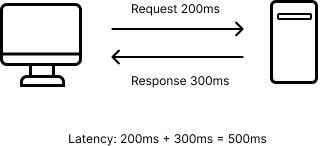

Latency: Latency is the amount of time it takes for a data packet to move from one location to another.

Throughput: Throughput measures an application’s processing capacity. It is calculated as the number of requests (or transactions) the application handles per unit of time, such as requests per second. It is a crucial measurement when running a performance test on the application under test.
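As a worked example of that definition: throughput is completed requests divided by the length of the measurement window. The request count and window below are made-up numbers.

```python
def throughput_rps(completed_requests: int, elapsed_s: float) -> float:
    """Throughput in requests per second over a measurement window."""
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    return completed_requests / elapsed_s


# 12,000 requests completed during a 10-minute (600 s) test window.
print(throughput_rps(12_000, 600))  # 20.0
```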

Response time: Response time is a measure of how quickly a system or application reacts to a user request.
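Response time is usually reported as percentiles rather than only as an average, because a few slow requests can hide behind a mean. Here is a small Python sketch using the nearest-rank percentile method on hypothetical response times.

```python
import math
import statistics


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical response times (ms) from ten requests; one is very slow.
response_ms = [120, 130, 125, 140, 2000, 135, 128, 132, 138, 127]
print(statistics.mean(response_ms))   # 317.5
print(percentile(response_ms, 50))    # 130
print(percentile(response_ms, 95))    # 2000
```

The mean of 317.5 ms describes none of the actual requests. The median shows that a typical request took about 130 ms, and the 95th percentile exposes the 2-second outlier.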

Saturation: Saturation occurs when a resource is subjected to more load than it can handle. It’s the maximum utilization of that resource.

CPU Utilization: CPU utilization is the percentage of time the CPU is busy processing or executing tasks. The CPU is the computer’s brain, so this is one of the essential metrics collected during performance testing.
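To make "percentage of time the CPU is busy" concrete, here is a Python sketch that measures utilization for the current process only. It divides the CPU time consumed by the wall-clock time elapsed. During a real test you would monitor CPU on the servers under test with OS or monitoring tools. The `busy` and `idle` workloads are illustrative.

```python
import time


def cpu_utilization(workload) -> float:
    """CPU time used by this process divided by wall-clock time while workload runs.

    process_time() sums CPU time across all threads of the process, so a
    multithreaded workload can report more than 1.0 (more than one core busy).
    """
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    workload()
    cpu_used = time.process_time() - cpu_start
    wall_elapsed = time.perf_counter() - wall_start
    return cpu_used / wall_elapsed if wall_elapsed > 0 else 0.0


def busy() -> None:
    """CPU-bound work: keeps one core busy."""
    sum(i * i for i in range(2_000_000))


def idle() -> None:
    """Waiting without computing, like a thread blocked on I/O."""
    time.sleep(0.2)


print(f"busy: {cpu_utilization(busy):.0%}")
print(f"idle: {cpu_utilization(idle):.0%}")
```

On an otherwise quiet machine, the CPU-bound workload typically reports close to 100% and the sleeping one close to 0%.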

Memory Utilization: Memory utilization is the amount of memory consumed while processing requests.

Concurrent Users: Multiple users are logged in to the application and performing different tasks at the same time.

Simultaneous Users: Multiple users are logged in to the application and performing the same task at the same time.

Think time: Real users pause for a while before each action. When testing with virtual users, we must account for this think time in the scripts to simulate real-world scenarios.
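Think time also determines how many virtual users you need. A common way to size a closed workload is Little’s Law in its interactive form, N = X × (R + Z). Here N is the number of concurrent users, X the throughput, R the response time, and Z the think time. The Python sketch below applies the formula; the target numbers are illustrative assumptions.

```python
import math


def required_virtual_users(target_rps: float, response_time_s: float,
                           think_time_s: float) -> int:
    """Little's Law for a closed workload: N = X * (R + Z), rounded up."""
    return math.ceil(target_rps * (response_time_s + think_time_s))


# Target 50 requests/s, 0.5 s response time, 9.5 s average think time.
print(required_virtual_users(50, 0.5, 9.5))  # 500
# Dropping think time: far fewer users generate the same request rate.
print(required_virtual_users(50, 0.5, 0.0))  # 25
```

This shows why scripts without think time can mislead. Twenty-five virtual users firing requests back-to-back produce the same request rate as 500 users who pause between actions, but they can stress sessions, connections, and memory quite differently.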

Peak time: The anticipated busiest time for the server is called peak time, when the number of requests to the server is at its highest. For a theme park, weekends and public holidays are peak time.

Peak Load: Peak load is the highest expected load during peak hours (peak time). For the theme park, the expected number of visitors on weekends and public holidays is the peak load.

You won’t be as intimidated the next time you hear these buzzwords.

Types of Performance Testing

There are several types of performance testing, such as load testing, spike testing, endurance testing, stress testing, and volume testing.

Load testing is the most common type of performance test and helps to simulate real-world traffic loads on a system or application. Load Testing is used to measure the response time for a given set of users or transactions.

Spike Testing helps determine how the system behaves when there’s a sudden increase in user requests or transactions. Spike tests measure the response time of an application when presented with unexpected bursts in traffic or usage.

Endurance (Soak) Testing evaluates how an application performs over extended periods, for example by holding a steady load for hours to reveal resource leaks or gradual degradation. Stress tests, by contrast, measure how an application performs under extreme conditions, such as very high traffic or very high data volumes.

Stress Testing helps identify the breaking point of an application under extreme conditions, such as high traffic or resource utilization.

Volume tests allow developers to measure the impact that large amounts of data can have on system performance.
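One practical way to tell these types apart is by the shape of the load each one applies over time. The Python sketch below returns a target number of virtual users for a given second of the test. The function name, ramp times, and multipliers are hypothetical and illustrative, not recommendations.

```python
def target_users(kind: str, t_s: int, base: int = 100) -> int:
    """Target number of active virtual users at second t_s of a test.

    All numbers here are illustrative assumptions, not recommendations.
    """
    if kind == "load":       # ramp up over 5 minutes, then hold the expected load
        return min(base, base * t_s // 300)
    if kind == "spike":      # expected load with a sudden 10x burst from minute 10 to 12
        return base * 10 if 600 <= t_s < 720 else base
    if kind == "endurance":  # expected load held steady for many hours
        return base
    if kind == "stress":     # add 50 users every minute until the system breaks
        return base + 50 * (t_s // 60)
    raise ValueError(f"unknown test type: {kind}")


for t in (0, 150, 300, 650, 3600):
    print(t, target_users("load", t), target_users("spike", t), target_users("stress", t))
```

Volume testing is not on this list because it varies the amount of data rather than the number of users.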


Cloud Performance Testing

One way for testers to carry out performance testing is in the cloud. Using the cloud for performance testing brings multiple benefits: it supports testing at a much larger scale and adds the cost benefits of cloud infrastructure. Yet there are challenges that need addressing.

Managing and configuring cloud resources can be complex and often requires expertise in cloud platforms. Data security and compliance must be carefully addressed when using cloud resources for testing. Moreover, organizations may encounter latency issues when running tests from remote cloud locations. All of these issues require proper attention. In general, developers and testers can use the cloud to run load tests, check for potential security holes, and assess scalability.


Advantages of Performance Testing

Some of the key advantages are:

- This testing helps pinpoint bottlenecks, such as slow response times or resource limitations.

- By simulating increased user loads, performance testing assesses whether an application can scale to handle growing user demands.

- This testing helps identify areas where resources like CPU, memory, or bandwidth are underutilized or overutilized.

- It improves user experience and customer satisfaction.

- By proactively addressing performance issues, organizations reduce the risk of application crashes, downtime, and loss of revenue.

- This testing provides data for capacity planning, helping organizations determine the required infrastructure and resources for expected growth.

- High-performing applications enhance an organization’s reputation and brand image.

- By identifying underutilized resources, performance testing allows organizations to allocate resources more efficiently.

Disadvantages of Performance Testing

There are some disadvantages that you should know about:

- Performance testing requires significant computing resources, including hardware, software, and network resources.

- Designing, executing, and analyzing performance tests can be time-consuming, particularly for complex systems or applications.

- Simulating real-world conditions in test environments can be complex and challenging.

- Testers often need expertise and prior experience in executing performance tests with the right tools and methodologies.

- Organizations may face difficulties in scaling performance testing to match the ever-increasing complexity and size of modern software systems.

- Analyzing the results of performance tests and identifying the root causes of issues can be intricate and time-consuming.

- It may not be feasible to test all possible scenarios or user interactions, potentially missing certain performance issues.

How to Develop a Successful Performance Test Plan?

This section goes through the steps of creating a performance test strategy or plan. It also offers advice on developing an effective strategy that will help you succeed in your software engineering projects.

In general, what does a test plan require? You have probably written or seen one in your career, yet we frequently overlook the importance of a strong test plan. A performance test plan is produced in much the same way as a functional test plan. Beyond the standard sections of a test plan, it concentrates on the questions listed below.

- What kind of performance testing is required?

- Are there any known issues with the application?

- What will be the Performance Testing Methodology?

- What are the tools used in the testing process?

- What exactly is the list of non-functional requirements (NFRs), and which of them relate to performance?

- What data and metrics will be collected?

- What is the project’s technology stack?

- How will we document the test results?

Aside from that, understanding the general architecture of the project offers you a better grasp of how to troubleshoot or evaluate bottlenecks.

Below are a few important and standard sections in your performance test plan. Sections can be added or tweaked based on the project requirements.

- Introduction

- Project Overview

- Application Architecture

- Testing Scope (Requirements)

- Roles and Responsibilities

- Tools Installation and Config setup

- Performance Test Approach

- Performance Test Execution (Including the types of testing to be covered)

- Test Environment details

- Assumptions, Risks, and Dependencies

How to Do Performance Testing?

As you know, this testing evaluates how a software application performs under different conditions and workloads. This step-by-step guide explains how to carry it out.

1. Identify the Test Environment

Identify the testing environment, production environment, and tools required for testing. Document the software, hardware, infrastructure specifications, and configurations in production and test environments to ensure test consistency.

2. Select Performance Testing Tools

Choose an appropriate testing tool that suits your application’s technology stack and business requirements. Some popular performance testing tools include Apache JMeter, NeoLoad, and LoadRunner.

3. Define Performance Metrics

Define relevant performance metrics to measure during testing, such as response time, throughput, resource utilization, and error rates.

4. Plan and Design Tests

Identify the different scenarios you want to simulate during testing, such as normal usage, peak load, and stress conditions. Also, determine the number of concurrent users, transactions, and data volumes for each scenario.

5. Create a Test Environment

Set up a testing environment that closely resembles the production environment in terms of hardware, software, and network configurations.

Configure test databases, servers, and other components as needed.

6. Execute the Tests

Run the tests, monitor the system while they execute, and analyze the results.

7. Resolve and Retest

Make necessary fixes to the application based on the findings. Retest the application with the same scenarios to verify that the performance improvements have been successful.

Best Practices for Implementing Performance Testing

Here are some best practices for implementing effective and impactful performance testing:

Planning and Scoping:

- Define clear objectives: Align performance testing goals with your system’s overall purpose and user needs. What are you trying to achieve?

- Identify critical scenarios: Focus on testing realistic user behavior, peak usage times, and different data volumes.

- Set performance benchmarks: Define acceptable thresholds for key metrics like response time, throughput, and error rates.

- Choose the right tools: Select tools based on your budget, system complexity, and specific testing requirements.

Test Design and Execution:

- Start early and test often: Integrate performance testing throughout the development cycle, not just at the end.

- Utilize a variety of tests: Don’t rely solely on load testing; consider stress testing, scalability testing, and security testing for a comprehensive view.

- Simulate real-world conditions: Use realistic data sets and user profiles to mimic usage patterns.

- Monitor key performance metrics: Track and analyze metrics like response time, throughput, resource utilization, and error rates throughout the test.

- Document and record everything: Keep detailed records of test configurations, results, and findings.

Analysis and Reporting:

- Analyze data objectively: Don’t jump to conclusions; identify trends and patterns in the test results.

- Prioritize critical issues: First, focus on addressing the most impactful bottlenecks and performance problems.

- Communicate findings clearly: Present your results in a way that is easily understandable for stakeholders, using visuals and reports.

- Provide actionable recommendations: Don’t just identify problems; propose solutions and prioritize them based on impact and feasibility.

Continuous Improvement:

- Monitor performance after changes: Re-test the system regularly after implementing improvements to ensure effectiveness.

- Adapt and iterate: Be flexible and adjust your testing approach based on new features, changes, and evolving user behavior.

- Make performance testing a culture: Encourage collaboration between development, testing, and operations teams to ensure performance is a shared priority.


Tips for Performance Testing

Here are some tips:

- Keep the performance testing environment separate from the UAT environment.

- Pick the testing tool best suited to automating your performance tests.

- Run the performance tests multiple times to accurately measure the application’s performance.

- Do not modify the testing environment until the tests end.
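Running the same test several times only helps if you check how much the results vary between runs. A simple measure is the coefficient of variation: the standard deviation divided by the mean. The Python sketch below uses hypothetical p95 results, and its 10% cutoff is an assumption rather than a standard.

```python
import statistics


def coefficient_of_variation(values: list[float]) -> float:
    """Sample standard deviation divided by the mean (run-to-run spread)."""
    if len(values) < 2:
        raise ValueError("need at least two runs")
    return statistics.stdev(values) / statistics.mean(values)


# Hypothetical p95 response times (s) from repeated runs of the same test.
stable_runs = [1.20, 1.25, 1.18, 1.22]
noisy_runs = [1.20, 1.25, 1.18, 1.22, 1.95]

for runs in (stable_runs, noisy_runs):
    cv = coefficient_of_variation(runs)
    verdict = "consistent" if cv <= 0.10 else "investigate before trusting"
    print(f"CV = {cv:.2f}: {verdict}")
```

A high spread, as in the second set, often points to something in the environment changing between runs. That is one reason not to modify the environment mid-test.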

Performance Testing Challenges

The main challenges are:

- Some tools support web-based applications only.

- Some free tools may not work well, while most paid tools are expensive.

- Tools have limited compatibility.

- There are only limited tools to test complex applications.

- Organizations must look at the CPU, network utilization, disk usage, memory, and OS limitations.

- Other performance issues include long response times, load times, insufficient hardware resources, and poor scalability.


Conclusion

Convincing stakeholders to invest in this testing can be a herculean task, so start small. If possible, begin by offering it as a value-add on your existing projects. This also helps you and your team add a new skill to your tester’s hat.

If you come from a functional testing background and were hesitant, I hope this blog has helped you start learning and applying performance testing.

Performance testing is often executed too late, leaving no time for it to deliver the benefits it invariably offers when given the time and chance.

The goal of this blog is to give you a brief introduction to performance testing. Performance testing and engineering is a vast topic, and an equally interesting one.

So, the next steps are:

- Research the topic further, pick an open-source tool, and get your hands dirty.

- Try to implement it in your project, whether for web applications or APIs.

- Educate the team about the importance of performance testing.

Happy Performance days to you and your team!!

Frequently Asked Questions

How to Automate Performance Testing Using JMeter?

We can use Apache JMeter, an open-source tool, to automate performance testing for web applications. Testers create test plans with elements like thread groups, which represent virtual users, and HTTP samplers that define requests. JMeter helps us record user actions, parameterize inputs, and simulate various load conditions. It gives detailed reports on response times, throughput, and error rates. This helps find performance bottlenecks. We can also integrate JMeter with CI/CD pipelines. This makes performance testing continuous during development.
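In a CI pipeline, JMeter is typically run in non-GUI mode, for example `jmeter -n -t plan.jmx -l results.jtl`. A later step then decides whether the build passes. The Python sketch below summarizes such a results file. It assumes the results were saved as CSV with a header row that includes JMeter’s `elapsed` (milliseconds) and `success` columns. The file names and the sample data are placeholders.

```python
import csv
import io
import math


def summarize_jtl(jtl_text: str) -> dict[str, float]:
    """Summarize JMeter CSV results.

    Assumes the file has a header row that includes JMeter's default
    'elapsed' (milliseconds) and 'success' ("true"/"false") columns.
    """
    rows = list(csv.DictReader(io.StringIO(jtl_text)))
    if not rows:
        raise ValueError("no samples in results")
    elapsed_ms = sorted(int(row["elapsed"]) for row in rows)
    failures = sum(1 for row in rows if row["success"].strip().lower() != "true")
    p95_rank = math.ceil(0.95 * len(elapsed_ms))  # nearest-rank 95th percentile
    return {
        "samples": len(rows),
        "error_rate": failures / len(rows),
        "p95_ms": elapsed_ms[p95_rank - 1],
    }


# A tiny, made-up results file with the columns trimmed for readability.
SAMPLE_JTL = """timeStamp,elapsed,label,responseCode,success
1700000000000,180,Home,200,true
1700000000100,220,Search,200,true
1700000000200,950,Checkout,500,false
1700000000300,210,Home,200,true
"""

summary = summarize_jtl(SAMPLE_JTL)
print(summary)  # {'samples': 4, 'error_rate': 0.25, 'p95_ms': 950}
```

For a real run, you would read the file with `open("results.jtl", newline="")` and pass its contents in. The CI step could then fail whenever `error_rate` or `p95_ms` exceeds the thresholds your team agreed on.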

What is the Difference between Load Testing and Performance Testing?

Load testing is a type of performance testing. The former focuses on assessing how a system performs under expected load conditions, typically by determining if it can handle a specific number of users. On the other hand, the latter includes various types of tests, including load testing, and aims to evaluate the overall performance, scalability, and responsiveness of a system.

Is Performance Testing the Same As Functional Testing?

No, they are different but go together. Functional testing checks if the app works as expected and meets requirements. It focuses on accuracy and usability. Performance testing measures how well the app performs under load. It looks at speed, scalability, and stability. Functional testing ensures the app is correct. Performance testing ensures it works well in real-world conditions. Both are needed to deliver a strong, reliable app.

How to Do Performance Testing Manually?

In manual performance testing, we simulate user actions without load-generation tools. Testers manually perform tasks like logging in, searching, or submitting forms while monitoring response times and server behavior. Manual testing cannot generate high loads, but it works for small systems or early testing. We collect metrics using monitoring tools or server logs and analyze them for bottlenecks. Automated tests usually follow manual tests for a complete assessment.
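Single-user checks like these are still more reliable with a consistent way to record timings than with a handheld stopwatch. Here is a minimal Python sketch, assuming the step being timed can be triggered from a script. `time.sleep` stands in for that step, and the `stopwatch` helper is hypothetical.

```python
import time
from contextlib import contextmanager


@contextmanager
def stopwatch(label: str, timings: dict[str, float]):
    """Record how long the enclosed block takes (seconds) under `label`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[label] = time.perf_counter() - start


timings: dict[str, float] = {}
with stopwatch("search", timings):
    time.sleep(0.1)  # stand-in for the step being measured, e.g. one search request
print(f"search took {timings['search']:.2f} s")
```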

What is Failover Testing in Performance Testing?

Failover testing checks whether the app keeps working when some of its components fail. We simulate events such as hardware failures or server crashes to verify that redundancy mechanisms, such as standby servers and load balancers, take over correctly. The goal is to keep the app running with minimal downtime. For example, in cloud environments, failover testing makes sure workloads shift smoothly to healthy servers. This kind of testing is key for apps that need high availability.
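The logic that failover testing exercises can be illustrated on the client side: try the primary server and, when it fails, move on to a backup. In production this is usually handled by load balancers or DNS rather than application code. The Python sketch below is only meant to show what a failover test injects (a failing server) and checks (the request still succeeds). The server names and `fake_send` are hypothetical.

```python
from typing import Callable


def call_with_failover(servers: list[str], send: Callable[[str], str]) -> str:
    """Send a request to each server in order; return the first successful reply."""
    errors = []
    for server in servers:
        try:
            return send(server)
        except ConnectionError as exc:
            errors.append(f"{server}: {exc}")  # record the failure, try the next one
    raise ConnectionError("all servers failed: " + "; ".join(errors))


def fake_send(server: str) -> str:
    """Hypothetical transport that simulates a crashed primary server."""
    if server == "primary":
        raise ConnectionError("connection refused")
    return f"ok from {server}"


print(call_with_failover(["primary", "standby"], fake_send))  # ok from standby
```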

How to Do Performance Testing for Web Applications?

We follow several steps for performance testing web applications. First, we define goals like load capacity or response time. Tools like JMeter or LoadRunner simulate user actions and create traffic. During testing, we monitor metrics like CPU usage, memory, and response times. We analyze the results to find bottlenecks and optimize resources. Testing multiple times ensures the app meets standards before going live.

What is KPI in Performance Testing?

KPIs, or Key Performance Indicators, are important metrics in performance testing. They measure how well an app performs against set goals. Common KPIs include response time, throughput, error rates, and scalability. We use these metrics to check if the app can handle expected loads and meet user needs. By tracking KPIs, we can spot areas for improvement and ensure the app performs well.
