
Your automation suite looked perfect in the demo. However, three months later, it has become a nightmare that breaks with every minor UI change. Your QA team is spending more time fixing tests than actually testing.

Does that sound familiar to you? You’re not alone!

While automation testing promises faster, higher-quality software releases with fewer bugs, most teams hit roadblocks before they see those outcomes.

The problem isn’t automation itself, but the hidden issues in automated testing that catch teams off guard. From environment inconsistencies to maintenance overhead, these obstacles can turn your automation investment into a resource drain instead of a productivity boost.

The good news? Most of these challenges in automation testing are easy to handle once you know what to look for.

This guide breaks down the 17 most common test automation challenges that QA and engineering teams face, along with proven solutions that can turn them into opportunities for faster, more reliable, and cost-effective delivery.

Table Of Contents

- 1.Prioritizing test cases effectively

- 2.Maintaining test data and environment

- 3.Managing network disconnections

- 4.Fixing test script issues

- 5.Handling dynamic UI elements

- 6.Eliminating code smell from tests

- 7.Controlling up‑front and maintenance costs

- 8.Ensuring stability in test infrastructure and device labs

- 9.Selecting the right automation framework

- 10.Improving cross-team communication and collaboration

- 11.Taking real user conditions into account

- 12.Dealing with device and platform fragmentation

- 13.Integrating automation testing with CI/CD

- 14.Automating security checks

- 15.Meeting regulatory and compliance requirements

- 16.Analyzing and reporting results efficiently

- 17.Training and retaining skilled automation testers

- The bottom line

- Frequently Asked Questions

1.Prioritizing test cases effectively

Running every test on every build might sound safe, but it often slows you down more than it benefits you. In the race to automate everything, you end up wasting resources and time on low-risk checks while urgent bugs slip through.

It also leads to bloated test suites that take longer to run and maintain. Deciding which tests to automate can feel overwhelming, but making that choice deliberately is essential to getting real value from automation.

What’s the solution?

- Prioritize repetitive, time-consuming tasks: Go with tasks that take the most of your time manually, like login flows, form submissions, or data-heavy workflows. These are ideal for automation as they run often and rarely change.

- Focus on core business scenarios: Pay attention to high-impact areas like checkout, billing or user sign-up. These are directly related to your business ROI, so even minor bugs here can lead to serious issues.

- Use risk-based tagging: Group tests by their risk level, such as critical, medium, or low. Run high-risk tests more frequently, and leave the rest for pre-release or regression cycles.
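
As a rough illustration of risk-based tagging, the sketch below filters a suite by pipeline stage. The stage names, the `TaggedTest` class, and the sample test names are all hypothetical; most test runners offer their own tagging or marker mechanism that you would use instead.

```python
from dataclasses import dataclass

# Hypothetical policy: which risk levels run at each pipeline stage.
RISK_LEVELS_BY_STAGE = {
    "commit": {"critical"},
    "nightly": {"critical", "medium"},
    "pre_release": {"critical", "medium", "low"},
}


@dataclass(frozen=True)
class TaggedTest:
    name: str
    risk: str  # "critical", "medium", or "low"


def select_tests(tests, stage):
    """Return the names of the tests whose risk level is allowed at this stage."""
    allowed = RISK_LEVELS_BY_STAGE[stage]
    return [test.name for test in tests if test.risk in allowed]


# Illustrative suite; the names are made up for this example.
SUITE = [
    TaggedTest("checkout_happy_path", "critical"),
    TaggedTest("profile_avatar_upload", "low"),
    TaggedTest("coupon_validation", "medium"),
]

print(select_tests(SUITE, "commit"))
```

With this policy, every commit runs only the critical checkout test, while the low-risk avatar test waits for the pre-release cycle.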

2.Maintaining test data and environment

Even the most stable test scripts can fail if the data behind them isn’t reliable or if the environment changes without warning. Many teams still use shared test data or hard-coded values, which quickly become outdated. At the same time, others struggle with test environments that behave differently from staging to production or between local and cloud setups.

These mismatches lead to false test failures and hours spent debugging issues that aren’t actually bugs.

For instance, an e-commerce team noticed their cart flow tests were failing inconsistently. The issue wasn’t the script; stale product data and expired discount codes were triggering unexpected errors. Once the team automated the data updates before each run, the flakiness disappeared.

What’s the solution?

- Automate test data resets: Use scripts to refresh or restore test data before every run. This ensures that every test starts in a known, clean state and prevents failures caused by leftover or outdated data.

- Use isolated test environments: Avoid running critical tests on shared environments. Use containers or virtual machines to isolate test runs and eliminate side effects between teams.

- Keep environments close to production: Match test environments to production in terms of configurations, APIs, and data formats. The more realistic your test setup, the more reliable your results will be when it counts.
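
Here is a minimal sketch of an automated data reset, assuming a small SQLite database stands in for your real test data store. The `products` table and the seed rows are invented for the example; in practice the same idea usually lives in a setup hook or fixture of your test framework.

```python
import sqlite3

# Known-good seed data (illustrative values only).
SEED_PRODUCTS = [
    ("sku-1", "Mug", 999),
    ("sku-2", "T-shirt", 1950),
]


def reset_test_data(conn):
    """Rebuild the products table so every run starts from the same state."""
    conn.execute("DROP TABLE IF EXISTS products")
    conn.execute(
        "CREATE TABLE products (sku TEXT PRIMARY KEY, name TEXT, price_cents INTEGER)"
    )
    conn.executemany("INSERT INTO products VALUES (?, ?, ?)", SEED_PRODUCTS)
    conn.commit()


conn = sqlite3.connect(":memory:")
reset_test_data(conn)

# A test mutates the data...
conn.execute("DELETE FROM products WHERE sku = 'sku-1'")

# ...and the reset before the next run restores the known state.
reset_test_data(conn)
print(conn.execute("SELECT COUNT(*) FROM products").fetchone()[0])
```

The point is that the reset is idempotent: it does not matter what the previous run left behind.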

3.Managing Network Disconnections

Network disconnections are one of the common test automation challenges, especially when teams rely on remote databases, VPNs, third-party services, or APIs during test runs. Even a brief connectivity issue can block access to testing environments and delay the execution process.

In many cases, testers also struggle to access virtual machines or cloud environments due to network instability, adding further friction to automated workflows.

What’s the solution?

- Set up monitoring for network-dependent services: Implement monitoring tools to track the availability of databases, APIs, and third-party services. This helps you catch disruptions early and reduces the risk of cascading test failures.

- Use fallback or mock services: For critical flows dependent on third-party APIs, introduce mock servers or stubs to simulate responses. This ensures your tests remain reliable even when the real service is down.

- Secure and audit test infrastructure: Regularly scan your testing environment for outdated software, malware, or misconfigurations that may cause disconnection issues. Keeping your systems clean and updated reduces hidden vulnerabilities that affect test stability.
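
To make the mock-service idea concrete, the sketch below stubs a third-party shipping API with Python’s standard `unittest.mock`. The `checkout_total` function and the `get_quote` client method are hypothetical names chosen for the example, not part of any real service.

```python
from unittest.mock import Mock


def checkout_total(cart_total_cents, postcode, shipping_client):
    """Add shipping to the cart total using whichever client is injected."""
    quote = shipping_client.get_quote(postcode)
    return cart_total_cents + quote["amount_cents"]


# Stub standing in for the real third-party API during test runs.
fake_shipping = Mock()
fake_shipping.get_quote.return_value = {"amount_cents": 499, "currency": "USD"}

total = checkout_total(2000, "94107", fake_shipping)
print(total)
```

Because the client is passed in as an argument, the same checkout logic can run against the real service in a scheduled integration run and against the stub everywhere else.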

4.Fixing Test Script Issues

Writing clean scripts isn’t optional; it determines whether your automated testing will work or break under pressure.

If your scripts are cluttered, filled with hard-coded values, or poorly structured, they’ll start failing the moment something small changes in the application. When that happens, fixing one test often leads to breaking two more.

In addition to that, these scripts require ongoing maintenance to ensure they remain relevant as the app scales and reflect the latest functionality.

What’s the solution?

- Follow consistent naming conventions: Use clear, descriptive names for your test cases, functions, and variables. It makes scripts easier to read, debug, and hand off between team members.

- Use modular and reusable functions: Break common actions like login, form submission, or navigation into reusable components. This reduces duplication and makes updates easier when workflows change.

- Keep scripts organized and documented: Structure your test cases with clear sections and add brief inline comments where needed. When scripts are well-organized, they are much easier to maintain and expand as your test suite grows.

5.Handling dynamic UI elements

Modern applications are packed with dynamic UI elements like dropdowns, pop-ups, and AJAX-loaded content. These elements appear based on user actions or backend responses, so they don’t always show up instantly or behave consistently.

As a result, your automation scripts often fail when locators change or when elements take longer to load than expected.

What’s the solution?

- Use conditional wait mechanisms: Instead of adding fixed time delays, configure your tests to wait until a specific condition is met, such as an element becoming visible or clickable. This helps reduce false failures caused by varying load times.

- Choose stable and descriptive locators: Avoid fragile selectors that rely on layout structure or visual position. Use consistent attributes like unique IDs, names, or data-test tags to improve locator reliability across UI changes.

- Enable self-healing test capabilities: Some testing platforms, like Testsigma, offer self-healing locators that automatically identify and adjust to UI changes. This reduces the need for frequent script updates and helps maintain test stability over time.
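
The conditional-wait idea can be sketched in plain Python as a polling helper. This is a simplified stand-in, assuming a hypothetical `FakeElement` in place of a real page element; browser automation libraries typically ship their own explicit-wait utilities (Selenium’s `WebDriverWait`, for example) that you would use in real tests.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` elapses."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


class FakeElement:
    """Hypothetical element that becomes visible after a few polls."""

    def __init__(self, ready_after_polls):
        self._remaining = ready_after_polls

    def is_visible(self):
        self._remaining -= 1
        return self._remaining <= 0


button = FakeElement(ready_after_polls=3)
wait_until(button.is_visible, timeout=2.0, interval=0.01)
print("button is visible, safe to click")
```

Unlike a fixed `sleep`, the helper returns as soon as the condition holds and fails with a clear error when it never does.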

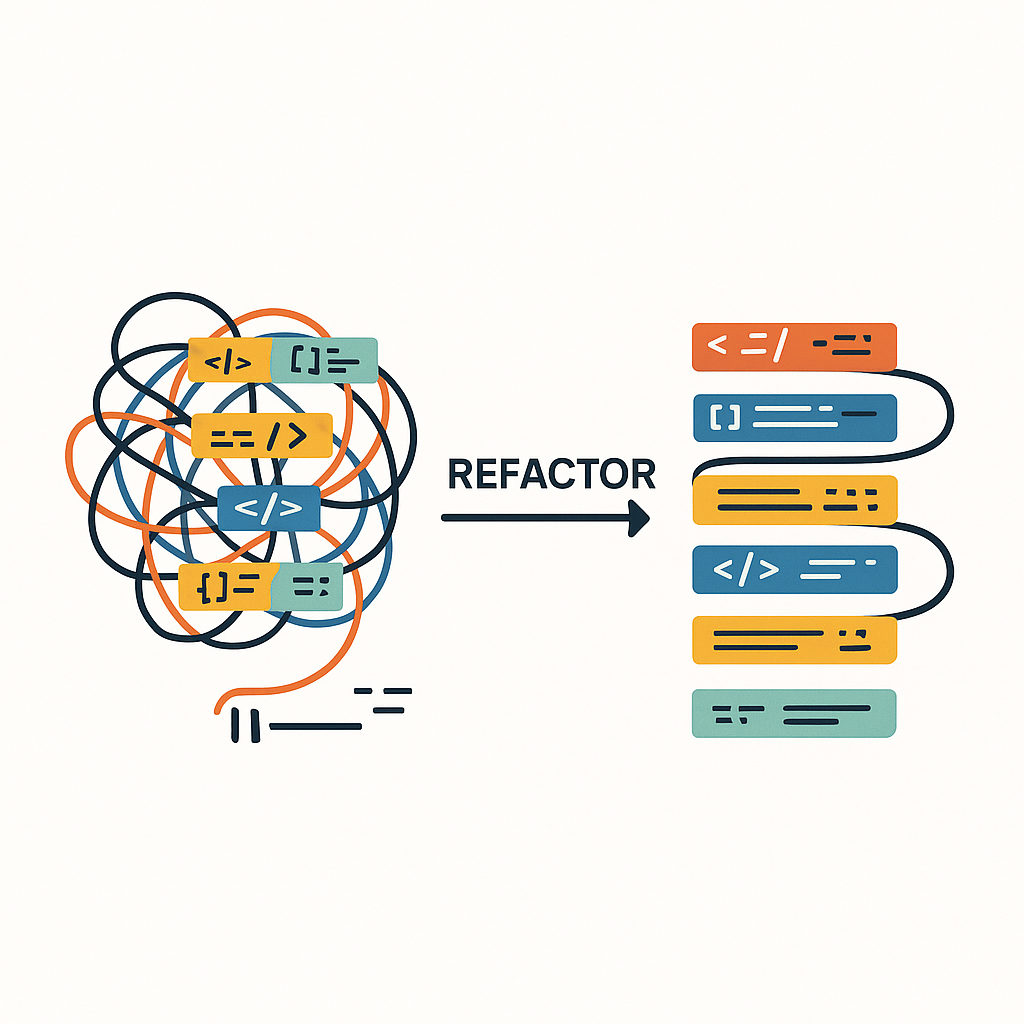

6.Eliminating Code Smell From Tests

A code smell is any characteristic of a program’s code that hurts its design quality. In test code, smells include overly long test cases, repeated logic, unused functions, or tight coupling between test steps.

While the test may still run, the structure becomes brittle and prone to breakage with every small application change.

What’s the solution?

- Watch out for common signs: Check and assess if your test scripts have duplicated steps, hard-coded values, overly long functions, or unclear assertions. These often indicate areas that need refactoring.

- Apply design principles like DRY and SRP: Follow coding best practices such as “Don’t Repeat Yourself” and the “Single Responsibility Principle” to improve clarity and maintainability.

- Review test scripts regularly: Schedule routine reviews to audit the quality of your test code. By performing regular cleanups, you ensure that the test suite remains stable and ready to scale as the application grows.

7.Controlling Up‑front and Maintenance Costs

Automated testing brings clear benefits, but it often comes with a higher upfront cost, which is one of the major challenges in automation testing.

You’ll need to account for licensing fees, infrastructure setup, and the time spent training your team to use the tools effectively. On top of that, there are ongoing costs tied to maintaining and updating tests as your application evolves.

Without a long-term plan, these hidden expenses can build up quickly and make automation feel more draining than helpful. But when planned well, this investment leads to stronger test coverage, better efficiency, and more confident releases.

What’s the solution?

- Start small and scale with intent: Instead of automating everything from day one, begin with a focused set of high-impact tests. This keeps initial tool and training costs low while proving value early.

- Track maintenance hours: Keep a simple log of how much time is spent fixing or updating tests. It will give you clear visibility into maintenance effort and help you spot where automation is costing more than it’s saving.

- Adopt a stable, reusable test design: Write modular scripts that can be reused across flows. The more reusable your test assets, the lower the cost of updates as the application evolves.

8.Ensuring Stability in Test Infrastructure and Device Labs

Device instability is one of the notable test automation challenges, especially in mobile testing environments. Mobile devices frequently undergo operating system upgrades, security patches, and performance shifts that can affect how tests behave.

Furthermore, if you’re using an in-house device lab, issues like wear and tear, outdated hardware, or misconfigured systems can make things worse.

Without stable and well-maintained infrastructure, test failures can stem from the lab itself rather than the application under test.

What’s the solution?

- Maintain and update physical test labs: Regularly check devices for performance issues, outdated software, and configuration mismatches. Keeping hardware and operating systems up to date reduces test flakiness and improves reliability.

- Use cloud-based device labs: Cloud testing platforms give you access to a wide range of real devices without the burden of physical upkeep. They offer better scalability and reduce the cost and complexity of setting up internal labs.

- Choose tools that support unified cross-platform testing: Use an automation solution that allows you to create and manage tests for web, mobile, and APIs in one place. This simplifies maintenance and ensures consistent results across platforms. Separately, utilities like MacKeeper can help keep individual macOS test devices clean and secure.

9.Selecting the right automation framework

With so many automation tools and frameworks available today, picking the right one isn’t just about features; it’s about fit. A tool that works well for web apps might fall short in mobile or API testing. Even open-source options, while flexible, require significant effort to standardize, integrate, and maintain.

Any wrong choice can set you up for failure, leading to broken scripts, extensive rework, and frustrated teams.

What’s the solution?

- Shortlist what you need: Narrow down tools based on your application type and integration needs, and not on popularity. Avoid platforms that offer everything but don’t align with your actual testing workflow.

- Match tool to team skillsets: If your testers aren’t experienced in coding, choose tools that lower the technical barrier. Low-code or AI-powered testing tools let non-technical members actively contribute without needing deep scripting knowledge.

- Run a proof of concept early: Test one or two core flows on each shortlisted tool. A 2-week POC reveals real limitations and helps you compare tools based on how they work for you, not on how they work for others.

10.Improving Cross-Team Communication and Collaboration

Clear communication between developers, testers, and stakeholders is essential for successful test automation. When teams aren’t aligned or fail to share requirements properly, it can lead to redundant tests, missed scenarios, or confusion during updates. Poor collaboration also makes it harder to maintain or revise test scripts over time.

For instance, an e-commerce app team faced delays because the development and QA teams working from different locations didn’t clearly communicate payment gateway requirements. As a result, the same tests were created twice, wasting both time and effort.

What’s the solution?

- Set up communication channels: Keep communication between teams regular and open by using collaboration tools like Microsoft Teams or Slack. When choosing a platform, many teams also evaluate mainstream Microsoft Teams alternatives to find one that fits their workflows. Whichever tool you pick, use it to share automation updates, clarify blockers, and announce changes.

- Align on test requirements early: Make sure that all teams, whether it is QA, development, or product, agree on test objectives and coverage areas before any scripts are written. This reduces duplication and helps avoid gaps in testing.

- Use centralized documentation tools: Maintain a shared space to track test cases, feature requirements, and environment details. Collaborative tools like test management platforms or shared dashboards help everyone stay updated and reduce miscommunication.

11.Taking Real User Conditions into Account

Most automated tests are built for ideal conditions such as a stable network, fast devices, and error-free user behavior. But in the real world, users deal with low bandwidth, older operating systems, and unpredictable interactions. When these factors aren’t considered during testing, bugs slip through that wouldn’t show up in a perfect environment.

Take a video streaming app: it might perform well when tested on high-speed internet but buffer constantly on your users’ slower networks. These gaps reduce test reliability and hurt user experience post-release.

What’s the solution?

- Simulate different network conditions: Use network throttling tools to test how your app behaves under varying bandwidths, including slow 3G or intermittent connections. This will help spot performance issues early.

- Include negative and edge-case behaviors: Automate tests that reflect real-world user mistakes like closing the app mid-process, switching tabs, or submitting incomplete forms. These cases expose usability and stability issues that ideal scripts often miss.

- Use geolocation and timezone data: Validate how your app performs in different regions, especially if features are location- or time-sensitive. Real user conditions often vary based on geography.

12.Dealing with Device and Platform Fragmentation

Testing on just one or two devices isn’t enough anymore. With so many device models, screen sizes, and OS versions in use, it’s easy for bugs to slip through. A test that works perfectly on a desktop Chrome setup might break on an older iPhone or an Android device. These issues usually surface too late, after the release, when users start reporting them.

Suppose you’ve just launched a food delivery app that passed all tests internally. Users on smaller Android screens can’t tap the checkout button because it is hidden below the fold. The issue went unnoticed in your initial testing simply because those screen sizes weren’t included.

What’s the solution?

- Make use of device usage statistics: Use analytics to understand which devices and browsers your users rely on. Prioritize test coverage for those top combinations instead of trying to test everything.

- Use real devices via cloud platforms: Cloud testing labs provide access to real mobile and desktop devices. These are actual hardware setups, not simulators, which help uncover issues that only appear in real-world conditions.

- Leverage cross-browser testing frameworks: Choose frameworks that support multiple browsers and platforms by design. This allows you to validate your application across different environments using a single test script.
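
One way to turn device usage statistics into a test matrix is to pick the most-used combinations until they cover a target share of sessions. The sketch below assumes you can export session counts per device/browser combination from your analytics tool; the numbers and the 80% target are illustrative.

```python
def top_combinations(usage_counts, target_percent=80):
    """Return the most-used combos that together reach the target share of sessions."""
    total = sum(usage_counts.values())
    ranked = sorted(usage_counts.items(), key=lambda item: item[1], reverse=True)
    selected, covered = [], 0
    for combo, count in ranked:
        # Stop once the combos picked so far already meet the target.
        if covered * 100 >= target_percent * total:
            break
        selected.append(combo)
        covered += count
    return selected


# Illustrative session counts, not real data.
USAGE = {
    "Android/Chrome": 500,
    "iOS/Safari": 300,
    "Windows/Edge": 120,
    "macOS/Firefox": 80,
}

print(top_combinations(USAGE, target_percent=80))
```

Here two combinations already account for 80% of sessions, so they get full regression coverage while the long tail can be sampled less often.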

13.Integrating Automation Testing with CI/CD

CI/CD pipelines are designed to speed up delivery, but test automation can slow them down if not implemented correctly. In fact, teams often face delays because their test suites take too long to run, scripts fail randomly, or the automation tool doesn’t integrate smoothly with their deployment setup.

Instead of catching issues early, these tests then become bottlenecks, making engineers skip them or ignore failures altogether.

What’s the solution?

- Run tests in parallel where possible: Use parallel execution to reduce total test time without sacrificing coverage. This helps fit regression or end-to-end suites into tight deployment schedules.

- Pick compatible testing tools: Choose automation tools that plug into your CI/CD setup easily and support custom triggers, test reports, and alerts so teams can act quickly on failures.

- Trigger only the necessary tests: Instead of running the full suite every time, set up logic to run tests relevant to the changes in each commit. Doing this will reduce test time and avoid unnecessary runs.

14.Automating Security Checks

Security testing is one of the most critical but also one of the hardest areas to automate. It often requires specialized tools, advanced technical skills, and the ability to simulate real-world attacks like SQL injection, XSS, or authentication bypasses.

Many QA teams aren’t equipped to design or interpret these tests, especially when faced with false positives or tool complexity.

What’s the solution?

- Use tools aligned with OWASP standards: Use security testing tools like OWASP ZAP or similar solutions that are built to simulate common vulnerabilities. These tools are widely adopted and support integration into automated pipelines.

- Automate basic vulnerability scans: Start with automated scans for high-risk areas like login flows, payment gateways, and input forms. This helps detect common threats early without needing deep manual expertise.

- Involve security experts for test planning: Collaborate with your security or DevSecOps team when designing test cases. Their input helps reduce false positives and ensures meaningful coverage.

15.Meeting Regulatory and Compliance Requirements

In regulated industries like healthcare, finance, or education, test automation goes beyond just speed and efficiency. Your tests must meet strict compliance standards around traceability, audit readiness, and comprehensive documentation. This means ensuring your automated tests align with requirements like HIPAA, PCI-DSS, or GDPR.

After all, regulators need proof that your software handles sensitive data correctly and follows essential guidelines.

What’s the solution?

- Automate audit trails and reporting: Ensure your test automation tool can log detailed execution data, timestamps, and outcomes. These logs help create reliable audit trails that support compliance checks.

- Tag compliance-critical tests: Label and group test cases related to specific regulations. This helps stakeholders quickly verify that mandatory scenarios like data encryption or access control are always tested.

- Retain test artifacts securely: Store logs, screenshots, and execution reports in encrypted or access-controlled systems to support traceability without risking sensitive data leaks.
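
As a sketch of what an audit-trail entry might contain, the example below writes one JSON line per test execution with a UTC timestamp, the outcome, and the regulations the test is tagged with. The field names and the `TC-101` identifier are assumptions for illustration; your compliance team or tooling may require a different schema.

```python
import io
import json
from datetime import datetime, timezone


def audit_record(test_id, outcome, regulations, executed_at=None):
    """Build one audit entry; defaults to the current UTC time."""
    if executed_at is None:
        executed_at = datetime.now(timezone.utc)
    return {
        "test_id": test_id,
        "outcome": outcome,
        "regulations": sorted(regulations),
        "executed_at": executed_at.isoformat(),
    }


def append_audit_line(stream, record):
    """Append the record as a single JSON line to any writable text stream."""
    stream.write(json.dumps(record, sort_keys=True) + "\n")


# In-memory stream for the demo; a real run would write to secured storage.
log = io.StringIO()
append_audit_line(log, audit_record("TC-101", "pass", {"PCI-DSS", "GDPR"}))
print(log.getvalue())
```

One line per execution keeps the log append-only and easy to filter by regulation tag when an auditor asks for evidence.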

16.Analyzing and Reporting Results Efficiently

Running hundreds of automated tests means nothing if teams can’t quickly interpret the results. If your reports are inconsistent and jumbled, you will end up spending more time decoding results than acting on them. This leads to missed bugs, false failures, and delayed releases.

It also slows root cause analysis, since testers can’t trace failures back to specific steps or inputs with confidence.

What’s the solution?

- Use visual dashboards for clarity: Adopt tools that present test results in clear, visual formats, highlighting pass/fail trends, flaky tests, and failure root causes. It will help speed up triage and decision-making.

- Set up smart alerts and filters: Configure alerts to flag high-impact failures immediately, while filtering out low-priority noise. This helps the right people to take action at the right time.

- Track recurring failures and flakiness: Use reporting analytics to identify unstable scripts or repeated issues, helping teams prioritize what to fix and improve test stability.
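
Tracking flakiness can start with something as small as classifying each test by its recent outcomes. The sketch below assumes the runs in each history were made against the same code revision, so mixed results point to instability rather than a genuine regression; the test names and histories are made up.

```python
def classify_history(history):
    """Label a test from its recent outcomes, each either "pass" or "fail"."""
    if not history:
        return "no_data"
    outcomes = set(history)
    if outcomes == {"pass"}:
        return "stable"
    if outcomes == {"fail"}:
        return "consistently_failing"
    return "flaky"


def flaky_report(histories):
    """Return (test_name, failure_rate) pairs for flaky tests, worst first."""
    report = []
    for name, history in histories.items():
        if classify_history(history) == "flaky":
            report.append((name, history.count("fail") / len(history)))
    return sorted(report, key=lambda item: item[1], reverse=True)


# Illustrative run histories, oldest run first.
RECENT_RUNS = {
    "test_login": ["pass", "pass", "pass", "pass"],
    "test_cart_total": ["pass", "fail", "pass", "fail"],
    "test_export_csv": ["fail", "fail", "fail", "fail"],
    "test_search_filters": ["pass", "pass", "fail", "pass"],
}

print(flaky_report(RECENT_RUNS))
```

Separating flaky tests from consistently failing ones matters for triage: the former usually need a test fix, the latter usually point at a real defect.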

17.Training and Retaining Skilled Automation Testers

Modern test automation requires more than just basic scripting. It demands a solid automation team that can write scalable tests, understand frameworks, work with APIs, handle CI/CD, and troubleshoot failures quickly. However, finding talent with that skill set, or training your existing team to reach it, isn’t easy, which makes skill gaps one of the biggest challenges in automation testing.

What’s the solution?

- Focus on upskilling programs: Create a dedicated training program for new hires and manual testers transitioning into automation. Pair them with senior engineers during onboarding and offer hands-on training related to your specific tech stack.

- Make test automation a shared skill: Promote cross-functional learning between developers and testers. When developers contribute to tests and testers review code, you create a more balanced, resilient team.

- Create incentives to retain test talent: Recognize your best talent through clear KPIs, performance reviews, and career advancement opportunities. This keeps your best testers from jumping ship and your automation efforts from losing momentum.

The Bottom Line

Automation testing doesn’t have to be a constant battle against flaky tests and maintenance overhead. The challenges we’ve explored are common roadblocks that do come with solutions.

The key is approaching automation strategically rather than rushing into implementation. Start with stable, high-value test cases and invest in proper test data management. Moreover, build maintainable frameworks from day one and choose tools that reduce complexity instead of adding to it.

If you’re looking for a one-stop test automation tool to speed up test case creation and execution, try Testsigma.

Testsigma delivers unified end-to-end test automation with cloud-based execution across thousands of environments. You can create tests in plain English without scripting, get reports that pinpoint issues instantly, and reduce your setup time. Start your free trial today to explore an automation testing solution that delivers!

Frequently Asked Questions

Generally, flaky tests, which produce inconsistent results or fail intermittently, are the most difficult challenge in automation testing. They consume time and effort while also eroding trust in the automation process.

The high initial expense of setting up automation infrastructure, as well as the necessity for experienced employees to build and manage automated tests, are two important limits for test automation. The inability to automate certain forms of testing, such as exploratory testing and usability testing, is another constraint.

To overcome technological problems during test automation, it is critical to have a thorough grasp of the technology stack being tested, as well as qualified automation engineers capable of creating robust and maintainable automation frameworks. It is also critical to employ the appropriate automation tools and technology for the job, as well as to have a well-defined testing strategy that considers the limitations of test automation.

Selenium automation faces real-time challenges such as dealing with dynamic web content, synchronization issues between the automation tool and the application being tested, and cross-browser compatibility issues. It also requires maintaining test suites and integrating automation testing into a continuous integration and delivery pipeline.