
Table Of Contents

- 1 What is Autonomous Testing: Benefits, Challenges & Popular Tools

- 2 What is Autonomous Testing?

- 3 How Does Autonomous Testing Work

- 4 Key Benefits of Autonomous Testing

- 5 Use Cases of Autonomous Testing

- 6 Top 5 Autonomous Testing Tools

- 7 Implementation Steps for Autonomous Testing

- 8 Challenges in Autonomous Testing

- 9 Best Practices for Successfully Implementing Autonomous Testing

- 10 How Testsigma Can Help With Autonomous Testing

- 11 Conclusion

- 12 FAQs

What is Autonomous Testing: Benefits, Challenges & Popular Tools

The software industry is rapidly evolving to match the speed and precision of GenAI. Software testing, a critical part of development, must keep pace with this agility. Autonomous testing, powered by Agentic AI, is emerging as a promising solution that addresses key challenges for testers and organizations. Let’s explore everything about autonomous software testing.

What is Autonomous Testing?

Autonomous testing is a method of software testing in which the entire testing cycle, from test creation and execution to maintenance and defect detection, is managed by artificial intelligence (AI) and machine learning (ML). The goal is to reduce human intervention so that testers can move from repetitive tasks to more strategic work, such as ensuring the software aligns with organizational goals and user expectations. For organizations, it can shorten the time required for software releases, which helps them stay competitive.

How Does Autonomous Testing Work

Autonomous testing leverages artificial intelligence to automate and optimize various stages of the testing process. This approach minimizes manual effort while improving accuracy and test coverage throughout the software lifecycle.

- Test Planning

AI in quality assurance analyzes the software requirements, architecture, and system behavior to identify the most critical areas that need thorough testing. By prioritizing high-risk components and test scenarios, it helps reduce the reliance on human intuition and guesswork, making the planning phase more data-driven and efficient.

- Test Creation

AI automatically generates detailed test cases by understanding the system requirements and behavior patterns, enabling the creation of comprehensive test scripts and relevant test data. This not only saves time but also ensures broader test coverage by including scenarios that might be overlooked in manual test design.

- Test Case Management

The autonomous testing system organizes test cases by factors such as risk, impact, and severity to prioritize testing efforts more logically. It also eliminates redundant tests and recommends relevant test cases based on historical test runs and documentation, optimizing overall test management.

- Test Execution

Tests are run automatically by an AI-driven system, especially for repetitive tasks like regression testing, freeing testers from manual execution. The system features self-healing capabilities which adapt tests in real time if an application’s UI or logic changes, significantly reducing false failure rates.

- Debugging and Analysis

AI tools analyze test execution logs and failure reports to identify root causes of defects swiftly. By proposing potential fixes or areas of focus, this speeds up resolution times and enhances the stability and quality of the software.

- Continuous Learning

The autonomous testing system constantly learns from past test outcomes, application updates, and new data patterns to improve future testing accuracy. This ongoing learning loop allows it to adapt to evolving software landscapes and optimize testing strategies dynamically.
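As a rough illustration of the risk-based ordering described under Test Case Management, the sketch below scores each test from risk, impact, and severity and sorts the suite by that score. The `CaseInfo` fields, the 1 to 5 scales, and the weights are assumptions made for illustration, not the scoring model of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseInfo:
    name: str
    risk: int      # 1 (low) to 5 (high); hypothetical scale
    impact: int    # same scale
    severity: int  # same scale


# Hypothetical weights; a real system would tune or learn these.
WEIGHTS = {"risk": 0.5, "impact": 0.3, "severity": 0.2}


def priority(case: CaseInfo) -> float:
    """Weighted score: a higher value means the test should run earlier."""
    return (WEIGHTS["risk"] * case.risk
            + WEIGHTS["impact"] * case.impact
            + WEIGHTS["severity"] * case.severity)


def prioritize(cases: list[CaseInfo]) -> list[CaseInfo]:
    """Return the cases ordered from highest to lowest priority."""
    return sorted(cases, key=priority, reverse=True)


suite = [
    CaseInfo("profile_avatar_upload", risk=1, impact=2, severity=1),
    CaseInfo("checkout_payment", risk=5, impact=5, severity=4),
    CaseInfo("login", risk=4, impact=5, severity=5),
]
ordered = [case.name for case in prioritize(suite)]
print(ordered)  # ['checkout_payment', 'login', 'profile_avatar_upload']
```

A fixed weighted sum is the simplest possible choice here; an autonomous system would more plausibly derive the scores from historical failures and usage data.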

Manual Vs Automated Testing Vs Autonomous Testing

| Aspect | Manual Testing | Automated Testing | Autonomous Testing |
| --- | --- | --- | --- |
| Test Design | Designed and executed entirely by humans | Manual creation of test scripts; automated execution | AI-generated and self-updating test creation, execution, and maintenance |
| Execution | Human testers run test cases | Tests run automatically by scripts | Fully autonomous execution with adaptive self-healing scripts |
| Human Involvement | High, testers write and run tests | Moderate, testers write scripts, schedule tests | Minimal, AI drives test lifecycle with little human input |
| Test Coverage | Limited by human capacity and time | Broader coverage leveraging scripts | Extensive, covering edge cases by learning from data and usage |
| Maintenance | Manual updates and debugging | Maintenance is required for broken or outdated scripts | AI self-heals tests, adapting to UI and code changes |
| Speed | Slow, especially for regression and repetitive testing | Faster than manual testing | Accelerated testing cycles with real-time adaptability |
| Accuracy | Error-prone due to human factors | Accurate within the scripted scope | Enhanced accuracy via AI pattern recognition and anomaly detection |
| Suitability | Exploratory, usability testing | Regression, load, and performance testing | Complex test scenarios with minimal manual upkeep |

Key Benefits of Autonomous Testing

Adopting autonomous testing brings numerous advantages that transform how software quality assurance is approached. The following benefits highlight why this method is gaining traction in the testing community.

- Increased Efficiency

Autonomous testing drastically cuts down manual efforts by automating the entire test lifecycle, from creation through execution and ongoing maintenance. This allows QA teams to focus on higher-level tasks while routine testing is handled by AI.

- Faster Test Cycles

The AI-driven process accelerates the generation and running of test cases, enabling shorter release cycles without compromising on the thoroughness of testing. This speed is critical for agile and DevOps environments that demand frequent software updates.

- Self-Healing Tests

Tests created by AI can adapt themselves automatically in response to code or UI changes, avoiding the need for constant manual updates. This self-healing capability reduces interruptions and maintenance overhead, keeping test suites reliable over time.

- Broader Test Coverage

The system can perform extensive and diverse test scenarios by analyzing application behavior deeply, including edge and corner cases that manual testers might miss. This increases defect detection rates, improving product quality.

- Better Accuracy

By leveraging machine learning models, autonomous testing reduces false positives and false negatives, leading to more reliable test results. AI can detect subtle anomalies and defects with greater precision than traditional scripted tests.

- Reduced Human Dependency

QA teams are freed from repetitive and mundane testing activities, allowing them to concentrate on complex exploratory testing and other strategic activities. This not only improves productivity but also enhances job satisfaction.

- Seamless DevOps Integration

Autonomous testing tools integrate smoothly with modern CI/CD pipelines, providing real-time test feedback during every stage of development and deployment. This integration supports continuous testing and accelerates the overall software delivery process.
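To make the self-healing idea from the list above more concrete, here is a minimal sketch of one simple form of it: trying fallback locators when the primary one no longer matches, then promoting the locator that worked. The toy `page` structure and the function names are hypothetical, and commercial tools typically rely on richer, model-based element matching rather than a fixed fallback list.

```python
from typing import Optional

# A toy "page": each element is a dict of attributes (a stand-in for a real DOM).
Element = dict[str, str]


def find(page: list[Element], attr: str, value: str) -> Optional[Element]:
    """Return the first element whose attribute equals the value, else None."""
    for el in page:
        if el.get(attr) == value:
            return el
    return None


def find_with_healing(page: list[Element], locators: list[tuple[str, str]]):
    """Try locators in order, primary first.

    Returns (element, index_of_locator_used), or (None, -1) if none match.
    """
    for i, (attr, value) in enumerate(locators):
        el = find(page, attr, value)
        if el is not None:
            return el, i
    return None, -1


# The button's id changed from "submit-btn" to "btn-submit" in a new release.
page = [
    {"id": "email", "tag": "input"},
    {"id": "btn-submit", "tag": "button", "text": "Sign in"},
]
locators = [("id", "submit-btn"), ("text", "Sign in")]

element, used = find_with_healing(page, locators)
if used > 0:
    # "Heal" the test: promote the locator that worked so later runs try it first.
    locators.insert(0, locators.pop(used))

print(element["id"], locators[0])  # btn-submit ('text', 'Sign in')
```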

Use Cases of Autonomous Testing

Autonomous testing serves a wide range of purposes across different testing scenarios and industries. Here are some key use cases where it delivers the most value.

- Regression Testing

Autonomous testing runs comprehensive regression tests automatically after each code change, ensuring that existing functionalities remain stable while new features are introduced. This continuous validation helps maintain software quality without slowing down development.

- Continuous Integration/Continuous Deployment (CI/CD)

In CI/CD workflows, autonomous tests trigger automatically in pipelines, providing immediate feedback to developers on the impact of their changes. This reliable and quick testing unlocks faster, more frequent releases with confidence.

- UI/UX Testing

The self-healing nature of autonomous tests allows them to validate user interfaces even as layouts and elements change frequently. This capability is vital for maintaining UI/UX quality in rapidly evolving applications.

- API Testing

Autonomous testing verifies APIs automatically for functionality, performance, and security without relying on manual scripting. This ensures APIs meet expected standards and respond correctly to various requests and conditions.

- Performance Testing

AI-driven testing simulates real-world scenarios to conduct load and stress testing efficiently, helping identify bottlenecks and performance issues before production. This proactive approach supports robust, scalable applications.

- Security Testing

Autonomous testing performs vulnerability scans and simulates attacks to detect security weaknesses automatically. This enhances protection by continuously monitoring for potential exploits and flaws.

- Industry-Specific Applications

Different sectors use autonomous testing to meet their unique regulatory, functional, and performance requirements. For example, finance leverages it for fraud detection validation, healthcare ensures compliance and data integrity, and e-commerce benefits from rapid release cycles and peak load testing.

Beyond software quality assurance, similar agentic AI principles are also being applied in customer-facing workflows, such as an AI voice agent or an AI receptionist, where autonomous systems manage interactions and learn from behaviour.

Top 5 Autonomous Testing Tools

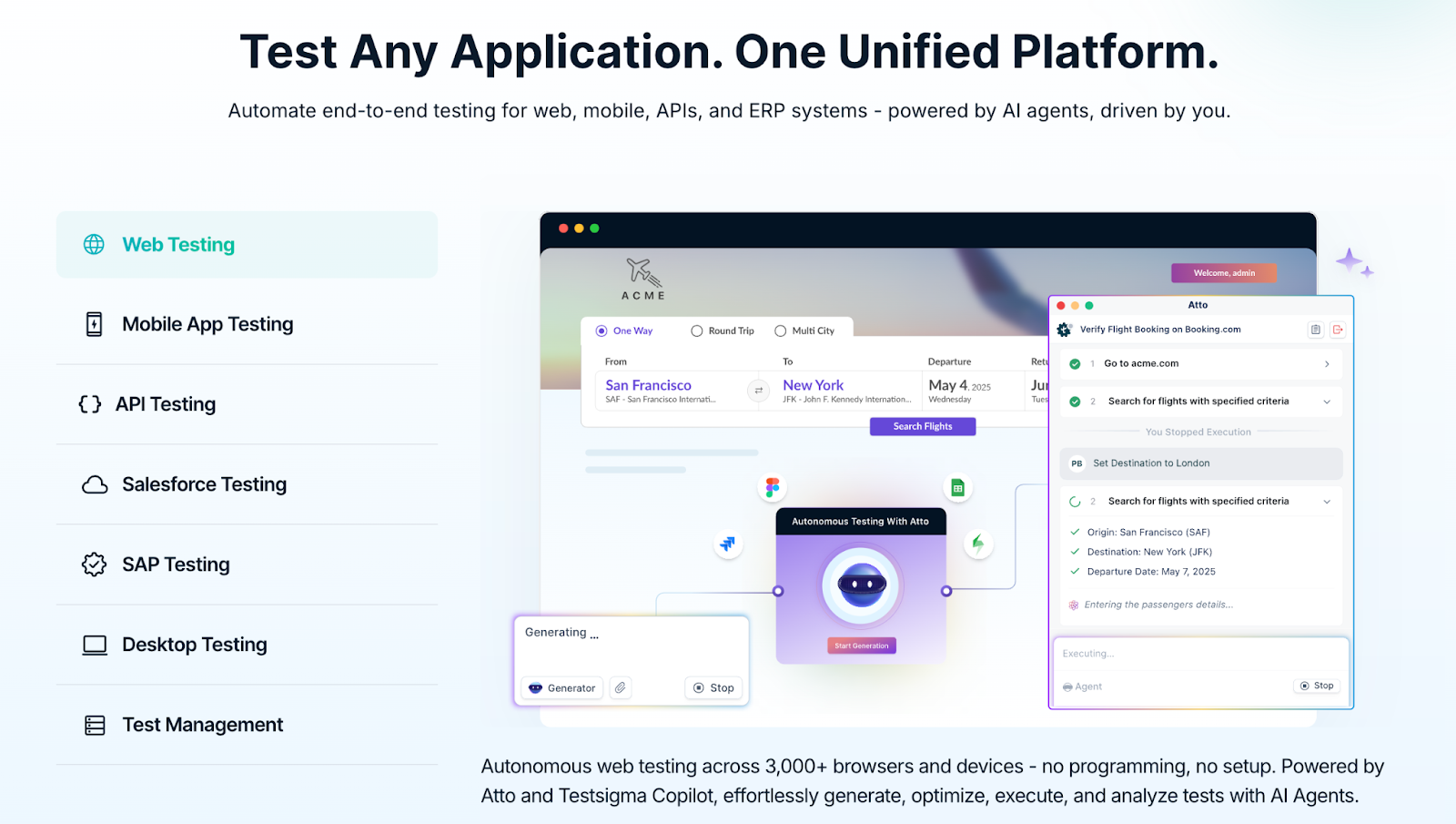

Testsigma

Testsigma is an AI-powered, codeless test automation platform designed for comprehensive testing across web, mobile, desktop, APIs, and enterprise applications like SAP and Salesforce. It features an AI coworker called Atto and multiple specialized agents that autonomously manage the end-to-end testing lifecycle — from sprint planning and test creation to execution, analysis, and bug reporting. Its self-healing capabilities and natural language scripting promote codeless test creation, enabling scalable and efficient autonomous testing in cloud environments with real device support.

Key Features:

- AI-powered Generator Agent that creates test cases automatically from plain English, Jira tickets, Figma designs, and multimedia content

- Self-healing tests that adapt automatically to UI and functional changes to reduce maintenance efforts

- Parallel test execution on 3000+ real devices, browsers, and OS combinations in the cloud

- Intelligent test optimization and execution scheduling powered by agentic AI for autonomous end-to-end workflows

Appvance

Appvance leverages AI-driven autonomous testing to cover web and mobile applications comprehensively, with additional focus on load, performance, and security testing. Its generative AI analyzes real user flows to automatically create and update regression test scripts, continuously learning and adapting to application changes. The platform’s strong self-healing capabilities reduce test fragility and maintenance, aimed at maximizing coverage of critical business workflows.

Key Features:

- AI-based creation of test scripts from natural language test cases and user behavior analytics

- Automatic self-healing of broken tests minimizing manual fixes

- Broad testing types including functional, load, performance, and security in a unified platform

- Dynamic prioritization of test scenarios based on real user activity and application risk analysis

Testim

Testim is an AI-enhanced UI and functional testing platform that provides code-free test creation and dynamic test stabilization. It uses AI-powered smart locators and stabilizers to adjust tests automatically when the application’s UI changes. Testim supports parallel cloud execution and cross-browser testing, helping teams speed up test cycles while reducing flakiness and maintenance.

Key Features:

- Visual, code-free test authoring enabling fast, easy test creation

- AI-powered self-healing locators and stabilizers to keep tests stable despite UI changes

- Parallel and cross-browser test execution for faster and broader coverage

- Cloud-based scalability to manage continuous integration and delivery pipelines

testRigor

testRigor offers codeless autonomous testing using plain English to define test cases, making it highly accessible to testers without programming skills. It supports cross-browser and cross-platform testing with AI-driven element locators that ensure stability and reduce maintenance. Its seamless CI/CD integration helps embed autonomous testing into continuous delivery pipelines efficiently.

Key Features:

- Natural language test creation for ease of use and rapid test development

- AI-powered element locators that reduce dependency on fragile selectors

- Cross-browser and cross-platform support including desktop, web, and mobile

- CI/CD integration for continuous autonomous testing within DevOps workflows

Mabl

Mabl is an AI-powered low-code platform for UI, API, and performance testing that emphasizes ease of use and reliability. Its self-healing framework dynamically adapts test steps to UI and workflow changes, reducing manual test maintenance. Mabl integrates seamlessly into DevOps pipelines, enabling continuous testing and faster delivery cycles while using machine learning to detect anomalies and regressions early.

Key Features:

- AI-driven, low-code test creation supporting UI and API testing

- Self-healing tests that automatically adjust to UI and workflow changes

- Integration with DevOps tools and CI/CD pipelines for continuous testing

- Machine learning-based detection of anomalies, performance issues, and regressions

Implementation Steps for Autonomous Testing

Implementing autonomous testing requires careful planning and incremental adoption to ensure maximum success. The following steps outline a practical approach to incorporating autonomous testing into your QA processes.

Step 1: Assess Current Testing Process

Start by analyzing your existing testing workflows to identify bottlenecks, repetitive tasks, and areas that would benefit most from automation and AI-driven approaches. This initial assessment helps define clear goals for autonomous testing adoption.

Step 2: Select an Autonomous Test Platform

Choose a platform that supports AI-enabled test generation, execution, self-healing capabilities, and seamless integration with your existing DevOps and CI/CD tools. The right platform should balance technical features with ease of use for your teams.

Step 3: Prepare Test Environment and Data

Establish stable test environments and gather high-quality test data to train the AI models effectively. Accurate and representative data is vital to enabling the AI to create reliable and meaningful test cases.

Step 4: Implement Gradually

Begin by automating critical or repetitive test scenarios such as regression or smoke tests, then gradually expand the scope as your confidence and expertise with autonomous testing grow. This phased approach reduces risk and fosters adoption.

Step 5: Train Teams and Adapt Processes

Invest in upskilling your testing teams to work effectively with autonomous testing tools and adjust established QA workflows to accommodate AI-driven processes. Collaboration between testers and AI enables better results.

Step 6: Monitor and Optimize

Continuously track the performance of your autonomous tests, reviewing metrics like test coverage, false positives, and execution times. Use these insights to fine-tune the test suites and improve AI models for better accuracy.
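As one possible way to track the metrics mentioned in this step, the sketch below summarizes a batch of run records into a pass rate, the share of failures that turned out to be false alarms, and the mean execution time. The `RunRecord` fields are assumptions for illustration; in particular, `real_defect` is assumed to be filled in by a person or process that triages each failure.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class RunRecord:
    test: str
    failed: bool
    real_defect: bool  # set during triage; only meaningful when failed is True
    seconds: float


def summarize(runs: list[RunRecord]) -> dict[str, float]:
    """Summarize a non-empty batch of test runs."""
    if not runs:
        raise ValueError("no runs to summarize")
    failures = [r for r in runs if r.failed]
    false_alarms = [r for r in failures if not r.real_defect]
    return {
        "pass_rate": 1 - len(failures) / len(runs),
        # Share of reported failures that triage found were not real defects.
        "false_alarm_share": len(false_alarms) / len(failures) if failures else 0.0,
        "mean_seconds": mean(r.seconds for r in runs),
    }


runs = [
    RunRecord("login", failed=False, real_defect=False, seconds=2.0),
    RunRecord("search", failed=False, real_defect=False, seconds=4.0),
    RunRecord("checkout", failed=True, real_defect=True, seconds=6.0),
    RunRecord("profile", failed=True, real_defect=False, seconds=8.0),
]
summary = summarize(runs)
print(summary)
```

Watching the false-alarm share over time is one way to judge whether self-healing and model tuning are actually reducing noise.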

Step 7: Scale and Integrate

Once maturity is achieved, fully embed autonomous testing within your CI/CD pipelines and broader software development lifecycle. This integration supports continuous testing practices and accelerates delivery cycles.
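A pipeline integration often comes down to a gate: a step that turns test results into an exit code, since CI systems generally treat a non-zero exit code as a failed step. The sketch below is a minimal, tool-agnostic version. The `gate` function, the results dictionary, and the 0.9 threshold are hypothetical; in practice the results would be read from the testing tool's report.

```python
def gate(results: dict[str, bool], min_pass_rate: float = 1.0) -> int:
    """Return a process exit code: 0 lets the pipeline continue, 1 stops it."""
    if not results:
        return 1  # treat "no tests ran" as a failure rather than a silent pass
    passed = sum(results.values())
    return 0 if passed / len(results) >= min_pass_rate else 1


# Hypothetical results keyed by test name (True means the test passed).
results = {"login": True, "checkout": True, "search": False}
exit_code = gate(results, min_pass_rate=0.9)

# A CI step would finish with sys.exit(exit_code) so the pipeline sees the outcome.
print(exit_code)  # 1, because 2 of 3 tests passed, which is below 0.9
```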

Challenges in Autonomous Testing

Here are some of the limitations and challenges in using and implementing autonomous testing:

- Initial Set-up Cost: Requires AI/ML tools, cloud infrastructure, and computing resources, which can be expensive. Most autonomous testing tools follow a subscription-based model, making them more suitable for larger enterprises.

- Skillset: The team needs expertise in testing as well as in AI, ML, and data science, and must also learn to use the new tool.

- Integration: Integrating with existing DevOps pipelines might require some customization.

- Test Data Management: Generating quality and diverse test data while maintaining data privacy and compliance can be difficult.

- AI Model Training: The AI model must be continuously trained to evolve with the changes in the application.

- Understanding Test Results: Interpreting the outputs of tests can be challenging, especially when they fail.

Autonomous testing is still an evolving process, and these challenges are expected to be addressed in the near future.
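For the test data management challenge listed above, one common technique is to pseudonymize sensitive fields before production-like records are used in tests. The sketch below does this with a keyed hash from Python's standard library. The field names and the key are assumptions for illustration, and pseudonymization alone may not satisfy every privacy or compliance requirement.

```python
import hashlib
import hmac

# Hypothetical key; in practice, load it from a secret store, not source code.
SECRET_KEY = b"example-key-for-illustration-only"
SENSITIVE_FIELDS = {"name", "email"}  # assumed field names


def pseudonymize(value: str) -> str:
    """Map a value to a stable token using a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}"


def mask_record(record: dict[str, str]) -> dict[str, str]:
    """Replace sensitive fields with tokens and leave other fields unchanged."""
    return {
        key: pseudonymize(value) if key in SENSITIVE_FIELDS else value
        for key, value in record.items()
    }


record = {"name": "Ada Example", "email": "ada@example.com", "plan": "pro"}
masked = mask_record(record)
print(masked["plan"], masked["email"] != record["email"])  # pro True
```

Because the same input always maps to the same token, relationships between records (for example, two orders from the same customer) survive masking, which keeps the data useful for testing.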

Best Practices for Successfully Implementing Autonomous Testing

Here is a strategic approach for the smooth implementation of autonomous testing:

- Decide Your Goal: Find out what you want to achieve, such as faster testing, improved test coverage, or reduced maintenance efforts.

- Choose the Right Tool: Compare different AI testing tools for automation, considering your requirements, budget, and compatibility with your existing systems, and select the best fit.

- Start with a Pilot Project: Begin with a small-scale project as a trial to identify possible difficulties before scaling to a larger, full-scale project.

- Train the Team: Make sure that your team is familiar with autonomous testing, AI/ML concepts, and the tool in use. Provide necessary training if required.

- Use Quality Test Data: Ensure diverse and high-quality data is available for training the AI model, as the accuracy of the output depends on it.

- Train AI Model: Focus on training the AI model with effective methods like feedback loops, adaptive learning techniques, etc.

- Integration with CI/CD Pipeline: The tool should be integrated with the CI/CD pipeline for immediate feedback on code quality.

- Compatibility with Other Platforms: The tool should be able to run tests across different browsers and devices.

- Maintenance: The test scripts should be reusable and easy to maintain.

- Reports and Analytics: There should be adequate reporting features to analyze test results and find areas of improvement.

- Monitor Performance: Continuously analyze the performance of the tool and the value it is bringing to your processes, such as saving time, improving accuracy, etc.

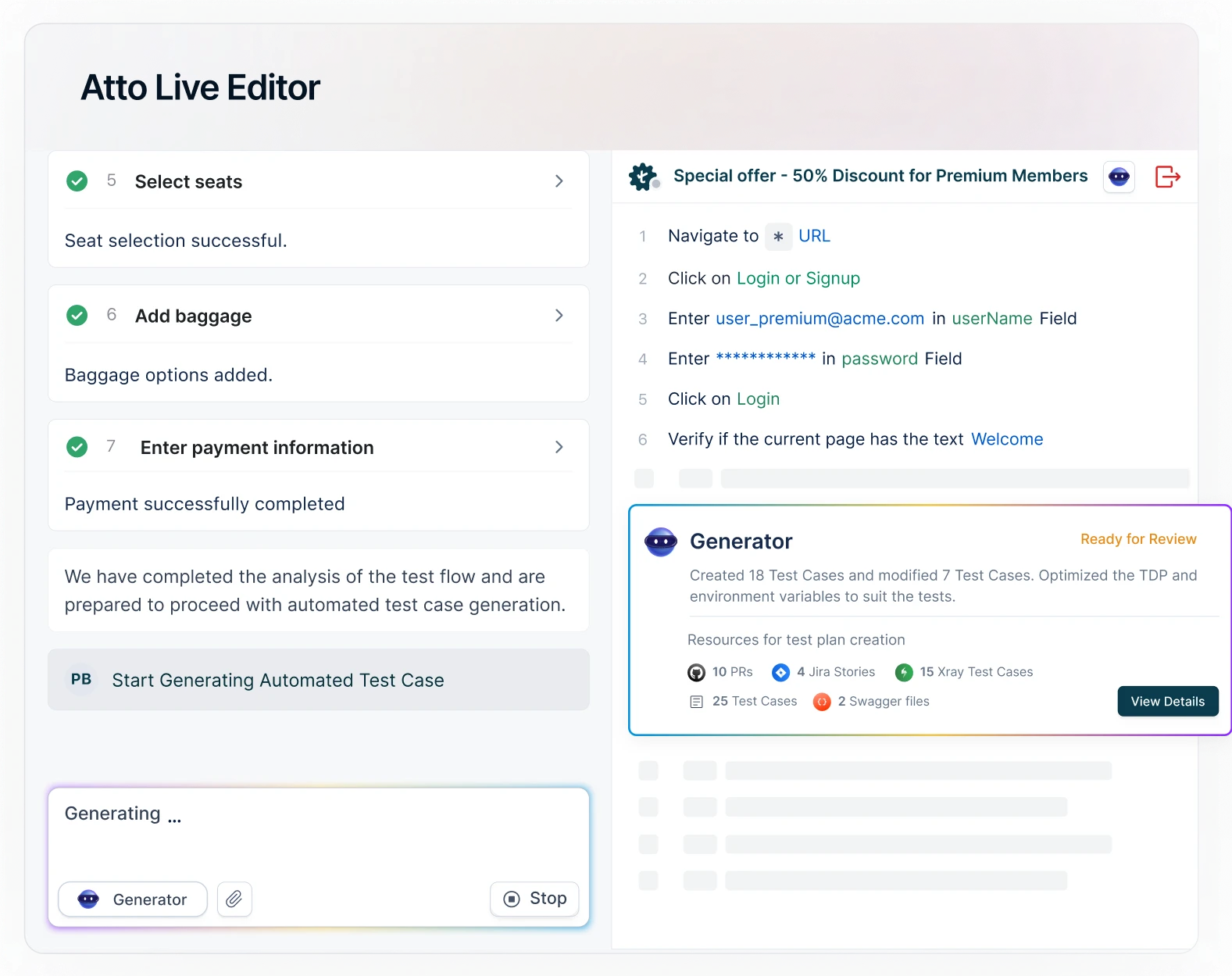

How Testsigma Can Help with Autonomous Testing

Testsigma is an AI-powered, codeless test automation platform designed to streamline testing across mobile, web, desktop, APIs, SAP, and Salesforce applications. At the heart of Testsigma’s autonomous testing capabilities is Atto, an AI coworker, supported by specialized agents like Sprint Planner, Generator, Executor, Optimizer, Analyzer, and Bug Reporter. Each agent handles key phases of the testing lifecycle, such as sprint planning, test case creation, execution, optimization, analysis, and bug reporting, minimizing manual effort while enabling your team to focus on strategic, business-critical testing that drives software quality.

Key Features of Testsigma

No-Code Test Creation

Write test cases in plain English or let the Generator Agent create them automatically from Jira tickets, Figma designs, images, product walkthrough videos, screenshots, PDFs, and other file formats. This approach removes the complexity of scripting and accelerates test development.

Cross-Browser and Cross-Device Testing

Run your tests across more than 3000 real devices, browsers, and OS combinations in the cloud. This eliminates the need to maintain physical device labs or complex environment setups while broadening coverage across devices and browsers.

Parallel Testing

Execute multiple tests simultaneously across different environments to speed up feedback cycles. This helps teams maintain quality without slowing down releases.
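The general idea of parallel execution can be sketched with Python's standard thread pool: every test is paired with every environment and the pairs are run concurrently. This is an illustration of the concept, not Testsigma's implementation; `run_test` is a placeholder and the environment names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def run_test(test: str, env: str) -> tuple[str, str, bool]:
    """Placeholder for a real test run; here every test simply passes."""
    return test, env, True


tests = ["login", "checkout"]
environments = ["chrome-windows", "safari-macos", "firefox-linux"]  # hypothetical

# One job per (test, environment) pair.
jobs = list(product(tests, environments))

with ThreadPoolExecutor(max_workers=4) as pool:
    # map() returns results in the order of `jobs`, even though runs overlap in time.
    results = list(pool.map(lambda job: run_test(*job), jobs))

print(len(results), all(ok for _, _, ok in results))  # 6 True
```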

Real-Time Test Reporting

Monitor test progress, detect bugs early, and track test outcomes easily through intuitive dashboards and detailed reports. This visibility supports faster decision-making and efficient issue resolution.

Scalability for Teams of Any Size

Whether you’re a small team or a large enterprise, Testsigma’s intelligent AI agents for software testing handle increased testing workloads without the need to grow your team significantly, enabling efficient scaling as demands evolve.

Conclusion

Autonomous testing is no longer just a buzzword; it is a reality powered by Agentic AI. While challenges exist, advancements in AI, self-healing mechanisms, and CI/CD integration will drive its adoption further. As the industry moves towards automation-driven quality assurance, autonomous testing will play a key role in ensuring faster, more reliable software releases.

FAQs

Will autonomous testing replace manual testers?

No, autonomous testing will reduce reliance on manual testing but will not eliminate the need for testers. Instead of performing repetitive tasks, testers will take on more strategic roles, focusing on complex, business-oriented testing. Their responsibilities will include overseeing software quality, ensuring a seamless end-user experience, and addressing broader testing challenges.

How does autonomous testing work?

Autonomous testing uses AI and ML algorithms to analyze the functionality of an application, adapt to new behaviors, and update test cases on its own.

Which AI algorithms are used in autonomous testing?

Autonomous testing leverages AI algorithms such as machine learning (ML) for pattern recognition, natural language processing (NLP) for test case generation, computer vision for UI testing, and reinforcement learning for self-improving test automation.